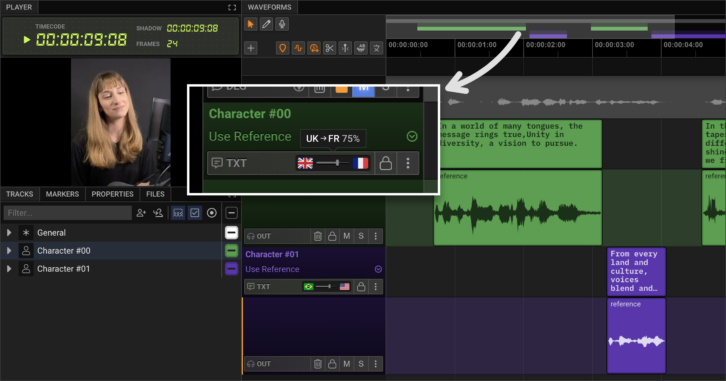

Deepdub, an AI-based audiovisual dubbing and language localisation company, has announced the release of Accent Control, a technology it describes as the first of its kind.

The proprietary generative AI technology allows characters' accents to be retained or modified when content is dubbed into another language.

In traditional dubbing, directors must decide whether the localised performance should preserve the original accents or adapt them to better suit the target audience.

Deepdub’s Accent Control technology uses custom generative AI models to manipulate and control the accents of characters in dubbed content, the company said.

Accent Control is powered by the company’s emotional text-to-speech (eTTS) 2.0 model, a multimodal large language model that supports more than 130 languages.

Deepdub’s research team developed the multilingual model to generate emotionally expressive speech and, in the process, discovered that it could also manipulate other speech characteristics, including accent. According to Deepdub, these capabilities were developed into Accent Control, which allows accents to be applied and adapted precisely across all of the 130-plus supported languages.

The company added that it is currently working on extending these capabilities to regional accents, which would enable micro-localisation.

Accent Control will first be used in collaboration with MHz Choice to dub international shows for North American audiences. MHz Choice streams its content on Amazon Prime and Apple TV.

“Audiences crave genuine experiences and our Accent Control technology marks a significant milestone in achieving that,” said Ofir Krakowski, CEO and co-founder of Deepdub.

“It reflects our commitment to breaking down language barriers while respecting and preserving the cultural essence of content. This innovation not only enhances the viewing experience but also underscores our leadership in AI-driven localisation solutions.”