During its upcoming Production Technology Seminar, the European Broadcasting Union (EBU) will reveal more details of its proof of concept (PoC) for using remote production in real-time animation.

As part of the IBC Accelerator programme, the proof of concept team developed a solution exploring a standardised pipeline that lets users combine everyday technology, such as smartphones and webcams, with real-time render engines to create 3D animated content quickly and collaboratively.

The project has been led by the EBU’s senior project manager Paola Sunna alongside RTÉ motion graphics designer Ultan Courtney. Early last year they came up with the idea of designing and building a low-cost pipeline for computer-generated characters, leveraging game engines, AI tools and off-the-shelf equipment.

“We got in touch with other broadcasters, including RAI, YLE, and VRT and told them about the project and what we wanted to try and test,” explains Sunna. “They came on board and then we submitted a proposal to the IBC Accelerator programme, it was accepted and so we started working with other champions and participants.”

Just before the team submitted their PoC, Nvidia launched Omniverse, a scalable, multi-GPU, real-time reference development platform for 3D content creation. “So we agreed with the other broadcasters to develop two pipelines,” continues Sunna, “one based on the beta version of Omniverse, and the other based on Unreal Engine. The team at RAI focused mostly on the Omniverse platform, which includes a solution called Machinima that is for cinematics, so animation, manipulation of the CG characters using high-fidelity renders. They also used Audio2Face, which allows you to generate facial animation from just an audio source. You record the audio clip and it’s used to animate the face of the CG characters.”

The team wanted to decentralise the production pipeline by relying on technology that audiences already had in their homes or could access. “A lot of our project, believe it or not, involves saying no to potentially better and more expensive technology,” states Courtney. “By sort of building on the model of almost having a connected series of home offices, we were able to connect all of the artists who were working internationally on the project. Often you’re expected to have a kind of generalist skill set when you work with a broadcaster and some of this required some specialist knowledge. But at the same time, we also needed almost to have access to a few specialists and when we came together we realised we had everything we needed to form a very well educated team.”

The focus on collaboration and remote workflow meant the team was spread right across Europe, with 3D modellers in Belgium, an FX artist and vocal performer in Finland, innovators in Italy, and a sound designer in Ireland. “One of the team at the EBU set up a live working mechanism for cloud-based workflow through a system called Perforce,” adds Courtney. “RTÉ were directing and doing performances as well and then RAI were testing tools and refining and cleaning motion capture data.

“Even though we all had a generalist skill set, each one was able to kind of push it slightly enough towards a specialisation without it turning into a full Hollywood pipeline. It was essentially trying to find a way to create a usable pipeline within the skill sets of broadcasters and also making it as accessible as possible to talent who would be digitally captured and then perform using their voices.”

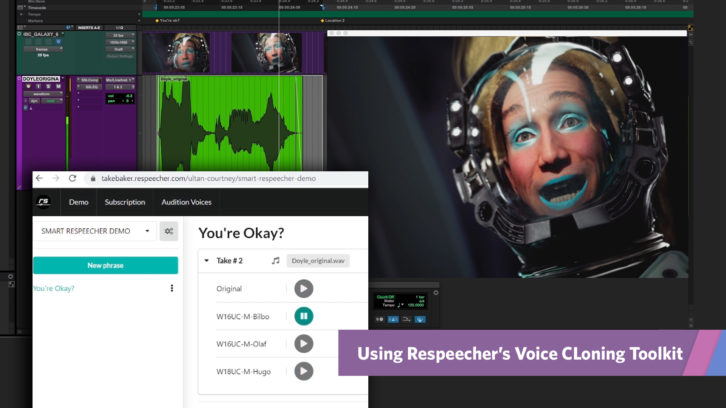

Once recorded, the voices were fed into Audio2Face, the facial animation tool used in the Omniverse pipeline. “We were able to take in people’s voice data, it would animate the face and then we were also able to use a participating company called Respeecher to change the timbre and character of that voice and effectively use one voice actor to do multiple characters,” continues Courtney. “That’s quite important because once we’re able to clone someone’s voice and change it from one character to another, we’re able to get more performances out of a single person.

“All of this was basically trying to find a way of using technology that’s local, that’s abundant, and use it to multiply creativity and multiply the ability to facilitate more content being created with off the shelf equipment,” he adds.

While vendors had input into the project, Courtney says it was important to be able to drive it forward as a team of broadcasters. “There was quite a lot of power in that because of the engagement we had through all of these countries working together and rallying around one piece of content,” he adds. “I think that’s something that really helped was the fact that because we had a treatment, and then a script, we were really making an actual piece of content that could be broadcast and it tested the pipeline in a way that was so much further beyond being in a lab for an hour and seeing how it worked. It was really challenging, especially to do twice, using the Omniverse pipeline and the Unreal pipeline. Each had a different set of tools available.”

Courtney adds that this project has been a real labour of love for everyone who worked on it, with many working on the pipeline while also working on their ‘proper jobs’. “I spent 141 days on this, in addition to my full-time job,” he says. “Everyone was giving up their time. Not only did we feel like we were at the front of something new, we put everything we had behind it in such an international capacity.”

So far, the pipeline has been used on non-live content, but the next stage is to look at live production possibilities. “We want to look at that for the second phase of the work, and the EBU is also thinking about establishing a working group to focus on CG animation in real time for live shows, so that if the quality is satisfactory, broadcasters can add CG characters into live shows,” states Sunna.

“We’ve had colleagues from other broadcasters, not involved in the IBC Accelerator, who are very interested in understanding what we’ve been doing, so maybe we can work with them on the next part of the project. The idea is to create a working group, which we’ll open to industry professionals because we need that. There’ll be a little bit of a shift in focus towards a real-time live animation pipeline. We have to find maybe one or more broadcasters keen to test these new pipelines during a live show.”

The team will be discussing their work as part of the EBU’s Production Technology Seminar, which runs from 1st to 3rd February.