Epic Games has released the second volume of its Virtual Production Field Guide, a free in-depth resource for creators at any stage of the virtual production process in film and television.

The publication offers a deep dive into workflow evolutions including remote multi-user collaboration, new features released in Unreal Engine, and interviews with industry leaders about their hands-on experiences with virtual production.

Included in the interviews is a Q&A with London-based visualisation studio Nviz, whose credits include Devs, Avenue 5, The Witcher, Solo, Life, Hugo, and The Dark Knight. In this exclusive extract, CEO Kris Wright and chief technology officer Hugh Macdonald share how Unreal Engine is helping to drive innovation across previs, techvis, stuntvis, postvis, simulcam, graphics, and final imagery.

How long have you been incorporating Unreal Engine into your work?

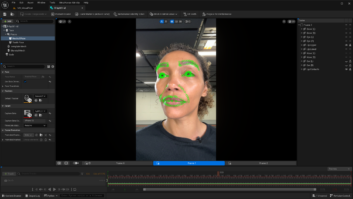

Hugh Macdonald: Unreal Engine has been in our previs pipeline for about three years and in the simulcam pipeline for the last year. Prior to that, we were using Ncam’s own renderer, which uses MotionBuilder. We did a job last year that was heavily prevised in Unreal and we did simulcam on set with it, including facial motion capture of CG characters. We did postvis in Composure in Unreal Engine as well.

What led to your adopting Unreal Engine for virtual production?

HM: It was a combination of having previs assets already existing in Unreal Engine plus the visual fidelity, the control that you get, and the need to do facial motion capture. We felt Unreal was the way to go for this project, because of Epic’s film experience coming from people like Kim Libreri [CTO at Epic Games]. Kim gets the visual effects world. Epic is a company that understands the different sectors and different challenges. Having a CTO who really knows our world inside out is really encouraging for what it could mean for the engine in the future.

What are you typically visualizing with simulcam?

HM: Eighty per cent of the time, it’s environments. It might be creatures or vehicles in the background, but most of the time it’s environments. The main benefit there is to give the camera operator and director the ability to get an understanding of what the shot’s going to be like.

So you might be framing a character in the foreground and there’s going to be a big CG building behind them. Without simulcam, you might cut off the top of the building or have to shrink the building in that one shot just to make it look right. The key is the ability for everyone on set to have an understanding of exactly what the shot will look like later, over and above the previs and the storyboards.

What kind of data do you need to make simulcam work?

HM: We record all the camera data and tracking data, which includes the camera’s location within the world. So in post you know where the camera was, and how that syncs up with both the animation that was being played back at the time and the original footage. We also generate visual effects dailies as slap comps of what was filmed with the CG that was rendered.

How do you track the set itself?

HM: It doesn’t require any LiDAR or pre-scanning. We use Ncam, which builds a point cloud on the fly. Then you can adjust the position of the real world within the virtual world to make sure that everything lines up with the CG. It’s a slightly more grungy point cloud than you might get from LiDAR, but it’s good enough to get the line-up.

On a recent project, we had a single compositor who would get the CG backgrounds from the on-set team and the plates from editorial and slap them together in Nuke. Generally, he was staying on top of all the selects that editorial wanted every day, to give a slightly better quality picture.

Kris Wright: And because it’s quite a low-cost postvis, it was invaluable for editorial. Some of that postvis stayed in the cut almost until the final turnover. So it was a way to downstream that data and workflow to help the editorial process; it wasn’t just about on-set visualization.

How does switching lenses affect simulcam?

HM: With Ncam, the lens calibration process will get all the distortion and field of view over the full zoom and focus range including any breathing. If you switch between lenses, it will pick up whatever settings it needs to for that lens. If a new lens is brought in by the production, then we need about twenty minutes to calibrate it.

How has your workflow adapted to the increased need for remote collaboration?

HM: We currently have the ability for a camera operator or a DP or director to have an iPad wherever they’re located, but have all the hardware running a session in our office. You can have a virtual camera system tech controlling the system from wherever they are. Then you can have a number of other people watching the session and discussing the set at the same time.

We see remote collaboration as very important even without everything going on with the current lockdowns, for example, in a scenario where the clients can’t get to our office because they’re on a shoot elsewhere and it’s too much hassle. Being able to do this remotely, with multiple other people (whether it’s the effects supervisor, previs supervisor, editor, or anyone else) watching the session, commenting on it, and understanding what’s going on, gives us much more flexibility.

KW: The current solutions that I’ve seen are people on a Zoom call or Google Hangouts saying, “Oh, can you move the camera a bit here? And now there.” We wanted to iterate on that. Everybody has an iPad, which they can now shoot all visualization and previs with. We try to keep it as low-footprint as possible and software-based. Literally, you go on a phone, we’ll put on the software and this is what you need to download. Everybody else can view it in a virtual video village.

Where do you see your workflows with Unreal Engine evolving in the future?

HM: The visual fidelity coming in UE5 is going to be amazing and a massive leap forward. More people are going to embrace in-camera visual effects like those in The Mandalorian. It’s going to become more accessible to more productions. We’re going to start seeing a lot more dramas and period pieces using the technology.

There are going to be a lot more live events productions using it, such as concerts. What’s been announced is about the visual fidelity of the lighting and the geometry. Unreal Engine is going to get used more and more for seamless effects.

KW: One of the really exciting things is the application base. We find the virtual art departments now embracing this, and production designers are now using VCam to show their sets before they even go to previs. I’ve even heard of grips and gaffers bringing people into virtual sessions to move assets around. Epic’s really doing this right, and has seen the bigger picture: not only the image fidelity, but also how this tool, because of its real-time aspect and iterative processing, can help decision-making across all parts of filmmaking.

The full version of Epic Games’ Virtual Production Field Guide is available to download here.