There’s been a quiet revolution in television sound, and it’s all about the objects.

Object-Based Audio (OBA) doesn’t just open doors for people to experience audio content in new ways; it kicks them down and sounds amazing while doing so.

It gives content producers more freedom to generate a range of audio experiences for their customers, and earlier this year the Eurovision Song Contest in Liverpool marked a milestone in the way it can be produced and delivered.

OBA works by encoding audio objects with metadata that describes what each object is and how it contributes to a mix. An audio object can be anything: an instrument, a commentator, or a crowd mix. It enables content providers to deliver personalised and immersive listening experiences, also known as Next Generation Audio (NGA), to customers. A receiver in the consumer’s equipment decodes the metadata to ensure the mix is rendered as the producer intended.
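To make the idea concrete, here is a minimal sketch of a receiver that mixes only the objects the listener has left switched on. It is an illustration only: the `AudioObject` type, its fields, and `render_mono` are hypothetical names, and a real NGA renderer also handles positions, loudness, and speaker layouts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AudioObject:
    """One audio object: mono samples plus a little descriptive metadata."""
    name: str             # what the object is, e.g. "crowd" or "commentary"
    samples: List[float]  # mono PCM samples as floats
    gain: float = 1.0     # linear gain the producer set for this object
    enabled: bool = True  # whether the listener has this object switched on


def render_mono(objects: List[AudioObject]) -> List[float]:
    """Sum the enabled objects into one mono mix, applying each object's gain."""
    active = [obj for obj in objects if obj.enabled]
    if not active:
        return []
    length = max(len(obj.samples) for obj in active)
    mix = [0.0] * length
    for obj in active:
        for i, sample in enumerate(obj.samples):
            mix[i] += obj.gain * sample
    return mix


crowd = AudioObject("crowd", [0.25, 0.5])
commentary = AudioObject("commentary", [0.5, 0.5])

full_mix = render_mono([crowd, commentary])

# The listener switches the commentary off and hears only the crowd.
commentary.enabled = False
crowd_only = render_mono([crowd, commentary])
```

Because the choice is made in the receiver rather than in the studio, the same delivered objects can serve both listeners.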

The Audio Definition Model (ADM) was published in 2014 by the EBU and became an ITU Recommendation in 2015 (ITU-R BS.2076). It is an open standard that all content producers can use to describe NGA content. As an open model, it can be interpreted by both Dolby’s AC-4 and Fraunhofer’s MPEG-H, and anyone else who wants a piece of the action as encoders evolve.

“File-based ADM is reasonably well adopted now,” says Matt Firth, project R&D engineer at BBC R&D in Salford, UK, and part of the Eurovision S-ADM trials. “ADM is designed to be an agnostic common ground between NGA technologies to allow programme makers to produce something in ADM and not worry about the format that the content needs to be in for a particular emission route, whether that is AC-4 or MPEG-H. If your content is in ADM, you can feed that into the encoder and it’s the encoder’s job to put it into whatever proprietary format it supports and deliver it.”

If it is so well adopted, you might ask, why can’t I already personalise what I hear at home and listen to just the football crowd if I want to?

Well, for live content we’re not there yet. File-based ADM doesn’t work in real-time workflows such as live broadcast, which require a frame-based version of ADM.

Serial ADM, or S-ADM, is exactly that. Established in 2019 and standardised in ITU-R BS.2125, S-ADM provides metadata in time-delimited chunks and delivers them in sequence alongside the audio. In other words, it enables use of ADM metadata in real-time applications.
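The “time-delimited chunks” idea can be sketched in a few lines. This is a simplified illustration, not the BS.2125 frame format: the `MetadataEvent` and `Frame` types, the 20 ms frame length, and `chunk_into_frames` are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MetadataEvent:
    """A single parameter change for one audio object at a point in time."""
    time_ms: int
    object_id: int
    parameter: str
    value: float


@dataclass
class Frame:
    """One time-delimited chunk of metadata, sent alongside the audio it covers."""
    index: int
    start_ms: int
    duration_ms: int
    events: List[MetadataEvent] = field(default_factory=list)


def chunk_into_frames(events: List[MetadataEvent], frame_ms: int = 20) -> List[Frame]:
    """Split a timeline of events into contiguous fixed-length frames.

    Every frame up to the last event is emitted, even if it is empty, so the
    sequence can travel in step with the audio.
    """
    if not events:
        return []
    last_ms = max(ev.time_ms for ev in events)
    count = last_ms // frame_ms + 1
    frames = [Frame(i, i * frame_ms, frame_ms) for i in range(count)]
    for ev in sorted(events, key=lambda e: e.time_ms):
        frames[ev.time_ms // frame_ms].events.append(ev)
    return frames


timeline = [
    MetadataEvent(5, 1, "gain", 0.8),
    MetadataEvent(25, 1, "azimuth", 30.0),
    MetadataEvent(30, 2, "gain", 0.5),
    MetadataEvent(70, 1, "azimuth", 45.0),
]
frames = chunk_into_frames(timeline)
```

A file-based description can wait until the whole programme exists. A live chain cannot, which is why the metadata has to arrive in sequence with the sound it describes.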

While companies such as Dolby have been experimenting with S-ADM, there are still gaps to fill and workflows to develop, and the Eurovision trial was a massive step forward. As the first-ever BBC trial using S-ADM, it allowed Firth and his BBC colleagues to build new workflows, identify gaps, and glue the broadcast infrastructure together.

“It required the development of software to fill those gaps in the chain, but as we knew exactly what feeds we were getting, as well as the ADM structure that the emission encoder required, we were able to quickly create the software to do that conversion.”

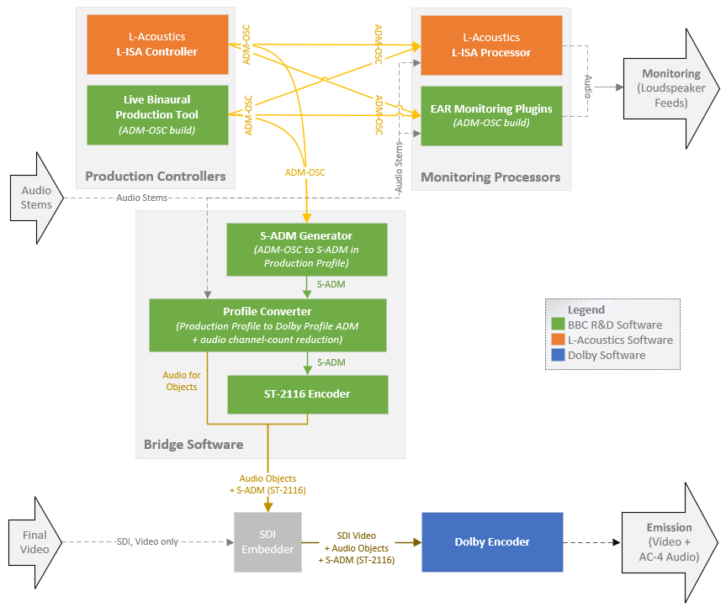

The BBC’s listening room in Salford received 62 channels from Liverpool over MADI, including music, presenters, FX and a variety of audience microphone feeds. The production was handled by L-Acoustics’ L-ISA Controller software, and the L-ISA Processor software was used for local monitoring.

“The L-ISA Controller allows a sound producer to set position and gain parameters for each of the input audio channels from a straightforward user interface. L-ISA supports ADM-OSC, which is a method of conveying ADM metadata using the Open Sound Control protocol.

“It presents a hierarchical path to a control or a parameter and defines a value for it. It’s really simple, making it really easy to implement. In the Eurovision project, we used ADM-OSC to refer to a particular audio object, and then to a parameter for that audio object, such as 3D position or gain. It makes it a nice solution for production controllers like L-ISA, and as long as the receiving software is also speaking ADM-OSC, it is easy to interoperate.”
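The “path plus value” idea Firth describes can be sketched using only the Python standard library. The byte layout below follows the OSC 1.0 message format (null-terminated strings padded to four bytes, then big-endian 32-bit floats). The `/adm/obj/<n>/<parameter>` address pattern reflects the published ADM-OSC convention as understood here and should be checked against the specification. The helper names are hypothetical, and this is not the BBC’s or L-Acoustics’ code.

```python
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def adm_osc_address(object_number: int, parameter: str) -> str:
    """Hierarchical path: namespace, then the audio object, then one of its parameters."""
    return f"/adm/obj/{object_number}/{parameter}"


# Set the gain of object 3, then its position as azimuth, elevation, distance.
gain_msg = osc_message(adm_osc_address(3, "gain"), 0.5)
position_msg = osc_message(adm_osc_address(3, "aed"), 30.0, 10.0, 1.0)
```

Each message would normally be sent as a UDP datagram to the receiving software. The host and port depend on the set-up and are not given in the article.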

To test interoperability, Firth adapted BBC R&D’s Live Binaural Production Tool, originally developed for the BBC Proms, to output ADM-OSC as an alternative production controller. He also built an alternative monitoring solution based around the EBU ADM Renderer (EAR), which also supports ADM-OSC; both controllers were able to function with either monitoring solution.

In addition, both the L-ISA Controller and the alternative production controller were able to connect to an S-ADM generator which BBC R&D built to convert the ADM-OSC to S-ADM. Using ADM-OSC to generate S-ADM in a live production broadcast chain was a world first for the team and another cause for celebration; it proved that any software which can generate ADM-OSC can be used in the production set-up.

“We also wanted to make sure that different implementations of ADM can talk to each other. For example, our own EAR Production Suite software uses a very unconstrained version of ADM known as the production profile, which is very flexible in the number of audio channels you can use, similar to the ADM produced from the production controllers in this trial. The problem is that emission codecs are more constrained based on the bandwidth they have available and the processing capabilities of consumer devices, so we developed a processor to squeeze all 62 channels down to just 12 channels for the encoder.

“These are conversion steps which we need to formalise as an industry so we can agree on how a large production might work. At the production end, you don’t want to constrain yourself to a particular codec because if you want to use another codec in the future, you don’t want to have to recreate your content to take advantage of those extra features.

“But if you already have an ADM structure in the production profile, you can just pass it straight through a conversion process and take advantage of those features straight away.”

Delivery from the production environment to the Dolby AC-4 encoder was achieved using SMPTE’s ST 337 and ST 2116 standards; ST 337 describes how to encode generic data into a PCM audio channel (specifically for AES3), and ST 2116 describes how to use ST 337 specifically for S-ADM metadata.

In the Eurovision setup, the first 15 output channels of the transport were available for audio and a 16th carried the compressed S-ADM metadata.

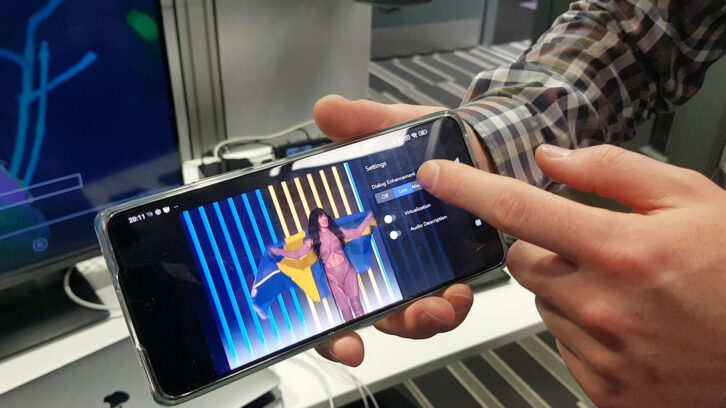

“This interfacing between our S-ADM generator and Dolby encoder system had never been tried before, so it was a pleasant surprise when it worked first time!” says Firth. “Among the 62 audio channels fed into the lab were the BBC One and BBC Radio 2 commentary feeds. This enabled us to provide the option to choose between these commentaries using the user interface on the TV or a smartphone in our test environment. The rest of the audio objects were mixed down to a 5.1.4 channel-based object, providing an immersive experience on capable devices.”
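The channel arithmetic can be sketched as follows. The article states that 15 transport channels were available for audio, that a 16th carried the S-ADM metadata, and that 12 channels went into the encoder. Reading those 12 as a ten-channel 5.1.4 bed plus the two commentaries is an inference. The speaker labels, the channel ordering, and `build_channel_map` are assumptions made for illustration only.

```python
TRANSPORT_CHANNELS = 16  # 15 for audio plus one for metadata, per the article
METADATA_CHANNEL = 16    # the 16th channel carried the S-ADM metadata

# Assumed labels for a 5.1.4 bed: five surround channels, one LFE, four height.
BED_5_1_4 = ["L", "R", "C", "LFE", "Ls", "Rs", "Ltf", "Rtf", "Ltr", "Rtr"]
COMMENTARIES = ["BBC One commentary", "BBC Radio 2 commentary"]


def build_channel_map(bed, objects):
    """Assign bed channels, then objects, to the audio slots; metadata goes last."""
    audio = list(bed) + list(objects)
    audio_slots = TRANSPORT_CHANNELS - 1
    if len(audio) > audio_slots:
        raise ValueError(f"{len(audio)} audio channels exceed {audio_slots} slots")
    channel_map = {}
    for channel in range(1, audio_slots + 1):
        # Slots beyond the programme audio are left unused in this sketch.
        channel_map[channel] = audio[channel - 1] if channel <= len(audio) else "unused"
    channel_map[METADATA_CHANNEL] = "S-ADM metadata"
    return channel_map


channel_map = build_channel_map(BED_5_1_4, COMMENTARIES)
```

Under these assumptions the 12 programme channels fit within the 15 audio slots with three to spare.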

On a stereo television the programme was rendered in stereo, but adding an Atmos-enabled soundbar provided a full immersive experience, which further demonstrated how the content adapts to different devices.

While producing live OBA content using S-ADM is still in its infancy, the BBC Eurovision tests took some huge steps, not only proving the concept but also joining many of the dots between devices and illustrating flawless interoperability between different systems.

Read more of Kevin’s interview with Matt Firth in the August issue of TVBEurope.