All new broadcast technologies bring benefits, but the focus is often on those that enhance the viewing or listening experience in terms of entertainment or impact. More practical and assistive features, such as closed captions and audio description, have largely been seen as necessary but aimed at a very specific section of the audience. That attitude is shifting, as shown by Next Generation Audio (NGA) being promoted more for its personalisation capabilities than for its immersive sound.

NGA systems are based on the concept of object-based audio (OBA), which builds on a foundation of traditional audio channels (5.1 or 7.1) but adds up to 128 ‘objects’. These individual audio elements can either be positioned in different parts of a soundscape to create an immersive experience or used to represent specific components of a signal, such as speech/commentary and background sounds. Their exact position or function is defined by accompanying metadata.

The two main NGA formats, MPEG-H Audio and Dolby AC-4, both use OBA for immersive audio (MPEG-H 3D Audio and Dolby Atmos respectively) as well as for personalisation and accessibility functions. Personalisation can mean selecting a specific commentator on coverage of a football match, or a particular language; accessibility can mean enabling a hard-of-hearing viewer to change the balance between speech and sound effects/music on a drama soundtrack.
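To make the object-plus-metadata idea above more concrete, here is a minimal Python sketch of a scene made up of a channel bed and tagged objects, with a personalisation step that keeps only the commentary in the viewer's chosen language. The class names, the `role`, `language` and `azimuth_deg` fields and the `personalise` function are all hypothetical; real MPEG-H and AC-4 metadata is far richer and is not modelled here.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_OBJECTS = 128  # object limit quoted in the text, used here as a simple cap


@dataclass
class AudioObject:
    # Hypothetical, much-simplified metadata for one audio object.
    name: str
    role: str                       # e.g. "commentary", "dialogue", "crowd"
    language: Optional[str] = None  # only meaningful for speech objects
    azimuth_deg: float = 0.0        # position in the soundscape (illustrative)


@dataclass
class Scene:
    bed: str                        # channel bed, e.g. "5.1" or "7.1"
    objects: List[AudioObject] = field(default_factory=list)

    def add(self, obj: AudioObject) -> None:
        # Refuse to exceed the object limit rather than silently dropping audio.
        if len(self.objects) >= MAX_OBJECTS:
            raise ValueError("object limit reached")
        self.objects.append(obj)


def personalise(scene: Scene, commentary_language: str) -> List[str]:
    """Return the names of objects to render: every non-commentary object,
    plus only the commentary whose language matches the viewer's choice."""
    return [
        obj.name
        for obj in scene.objects
        if obj.role != "commentary" or obj.language == commentary_language
    ]


scene = Scene(bed="5.1")
scene.add(AudioObject("commentary_en", "commentary", "en"))
scene.add(AudioObject("commentary_fr", "commentary", "fr"))
scene.add(AudioObject("crowd_home", "crowd", azimuth_deg=-30.0))
print(personalise(scene, "fr"))  # ['commentary_fr', 'crowd_home']
```

In this sketch the audio itself is never altered; the metadata alone decides what gets rendered, which is how one transmitted stream could serve viewers with different preferences.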

While the movement and all-encompassing effects of immersive audio are the obvious pull for feature films, there is a view, particularly among NGA developers, that personalisation and accessibility are the real point of OBA. “For us, it is the combination of personalisation and immersive that is most valuable,” comments Harald Fuchs, head of media systems and applications at Fraunhofer IIS, the primary developer of MPEG-H Audio. “You can take sounds from an event and create a nice 5.1.4 or 7.1.4 mix, which is the object side of audio capture. The next step is to leave those objects separate with some metadata; you take account of them in your mix but you don’t actually mix them into your 5.1.4. The next step will be where you have immersive audio but an immersive channel plus objects and you can even add some personalisation to it.”

It is in this expanded, combined mode that Fuchs sees MPEG-H Audio offering advantages and opportunities in the future. “If you only focus on the immersive and channel mix, it’s basically only a fraction of the features of the codec,” he says. “But I think it’s an important step towards the future because on the sound production side, people first have to learn to use the new methods and still create some nice mixes. As a next step they can go beyond that and use things for a personalised immersive experience.”

Testing MPEG-H Audio

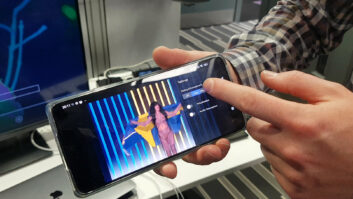

Such combinations featured in the MPEG-H Audio tests Fraunhofer carried out in the two years leading up to the pandemic. The system was tested at the 2018 and 2019 Eurovision Song Contests, presenting the event with immersive audio but also with more practical additional features. Fuchs says the challenge was making such a production work with the music and effects (the international sound) in a 5.1.4 mix, plus dialogue and the commentaries as separate objects offering personalisation such as dialogue enhancement, commentary selection and language choice.

“We have also done this with some sports events, for example with France Télévisions at the French Open,” he says. “That was in 2019, on air during the whole tournament as a DVB-T2 UHD service in Paris. They had the standard stereo sound, then a second audio track in MPEG-H with two commentaries and the 5.1.4. It was a complete, semi-automated live production that could switch to pre-recorded content when there was no ongoing live broadcast.”

The personalisation aspects of MPEG-H Audio include the ability to select a specific commentary for a sports match (the choice need not be limited to TV or radio commentators but could also include fans commentating), plus the potential to choose which end of a ground the crowd noise comes from (the home end or the away end). While this gives viewers more choice and flexibility in what and how they watch, OBA has other benefits when it comes to assistive listening.

We can’t hear you

Increasingly, people of all ages are experiencing hearing problems, for a variety of reasons. Broadcasters have seen complaints about programmes being difficult to understand increase over the last ten years. At the end of 2020, Fraunhofer IIS, in conjunction with the German regional public broadcaster WDR, published a survey which found that 68 per cent of the viewing audience, across all demographics, frequently or very frequently had problems understanding speech on TV. As a result, many people now resort to switching on subtitles when they cannot hear the dialogue properly.

Several OBA developers, including the BBC, have worked on techniques that give the viewer or listener the ability to change the balance between the speech and the background effects and/or music. The system for this within MPEG-H Audio is Dialog+, a production technology that allows the loudness levels of speech and background sound to be adjusted separately. To do this, Dialog+ uses deep learning to separate the speech from the rest of the soundtrack, which means it is applied once the final mix has been completed. In this way it is possible to create custom speech levels that meet specific personal needs.
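The deep-learning separation inside Dialog+ is not reproduced here. Assuming the speech and background have already been separated into two equal-length sample sequences, the personal adjustment described above reduces to applying a viewer-chosen gain, in decibels, to the background before summing. This is a generic remixing sketch, not Fraunhofer's implementation; `remix`, `db_to_gain` and their parameters are hypothetical.

```python
from typing import List, Sequence


def db_to_gain(db: float) -> float:
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def remix(speech: Sequence[float], background: Sequence[float],
          background_db: float) -> List[float]:
    """Sum speech with a background attenuated (negative dB) or boosted (positive dB)."""
    if len(speech) != len(background):
        raise ValueError("speech and background must have the same length")
    g = db_to_gain(background_db)
    return [s + g * b for s, b in zip(speech, background)]


# Lowering the background by 20 dB scales it to one tenth of its amplitude.
speech = [0.5, 0.5, -0.25]
background = [0.4, -0.4, 0.2]
print(remix(speech, background, 0.0))    # original balance
print(remix(speech, background, -20.0))  # background 20 dB quieter
```

Expressing the preference in decibels matches how loudness differences are usually described: a 20 dB cut is a factor of ten in amplitude, while the speech samples pass through unchanged.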

In all the different aspects of MPEG-H production, Fuchs says metadata is very important. “It needs to be tightly time aligned with the audio signals,” he says. “As a start, in existing SDI infrastructures we wanted to be able to use one of the audio tracks for the metadata delivery to the encoder. We have some kind of modulated signals that carry the metadata and from the encoding point of view it’s the same input interface. The encoder just reads the metadata and acts accordingly. It’s kind of remote controlled through production, so encoding is not more complicated than in the past. On the output, for emission, it’s a single bit stream. This means emission systems don’t really need to change. It’s one elementary stream for MPEG-H that carries whatever is produced, immersive with objects.”
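The quote does not spell out how the metadata is modulated, and the sketch below does not reproduce the real MPEG-H control-track format. It only illustrates the general idea under stated assumptions: metadata bytes are turned into an audio-rate signal using simple binary frequency-shift keying (FSK), so they can travel on an ordinary PCM audio track and stay sample-aligned with the programme audio. The sample rate, tone frequencies, bit duration and the JSON payload are all hypothetical choices.

```python
import math
from typing import List

SAMPLE_RATE = 48_000     # assumed sample rate of the carrying audio track
SAMPLES_PER_BIT = 480    # 10 ms per bit (illustrative, far slower than a real system)
F0, F1 = 1200.0, 2400.0  # assumed tone frequencies for bit 0 and bit 1
AMPLITUDE = 0.5


def bytes_to_bits(data: bytes) -> List[int]:
    # Most significant bit first.
    return [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]


def bits_to_bytes(bits: List[int]) -> bytes:
    out = bytearray()
    for k in range(0, len(bits), 8):
        value = 0
        for bit in bits[k:k + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


def modulate(data: bytes) -> List[float]:
    """Encode bytes as a sequence of tone bursts, one burst per bit."""
    samples: List[float] = []
    for bit in bytes_to_bits(data):
        freq = F1 if bit else F0
        samples.extend(
            AMPLITUDE * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n in range(SAMPLES_PER_BIT)
        )
    return samples


def tone_energy(window: List[float], freq: float) -> float:
    # Non-coherent detector: correlate with sine and cosine, sum the squares.
    w = 2 * math.pi * freq / SAMPLE_RATE
    c = sum(x * math.cos(w * n) for n, x in enumerate(window))
    s = sum(x * math.sin(w * n) for n, x in enumerate(window))
    return c * c + s * s


def demodulate(samples: List[float]) -> bytes:
    """Recover bytes by deciding, per bit window, which tone dominates."""
    bits = []
    for start in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        window = samples[start:start + SAMPLES_PER_BIT]
        bits.append(1 if tone_energy(window, F1) > tone_energy(window, F0) else 0)
    return bits_to_bytes(bits)


payload = b'{"dialog_gain_db": 6}'  # hypothetical metadata message
track = modulate(payload)
print(len(track) / SAMPLE_RATE, "seconds of audio")
print(demodulate(track) == payload)  # True
```

Because each message occupies a fixed, known span of samples on the same track, any delay applied to the audio group in this sketch delays the metadata equally, which is the alignment property Fuchs emphasises.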

MPEG-H Audio has been implemented in Brazil but has yet to be adopted by European broadcasters. While Dolby remains the higher-profile option, the personalisation and assistive features within MPEG-H could see it embraced as the broadcast market comes to appreciate what its non-immersive features can offer.