During this year’s Springwatch, the BBC R&D team used artificial intelligence to monitor the remote cameras used to film the show’s wildlife stars.

The R&D team applied an object recognition system to the video captured by the Springwatch cameras. The system is designed to detect and locate animals in the scene, and is based on the YOLO neural network, which runs on the open-source Darknet machine learning framework.

The team trained a network to recognise both birds and mammals. It runs just fast enough to find and then track animals in real time on live video. The data generated by this tool meant the team could be confident that an animal, rather than some other moving object, was present in the scene. Using this machine learning approach, the R&D team was able to move from knowing ‘something has happened in the scene’ to ‘an animal has done something in the scene’.
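As a rough illustration of how detector output could answer the question "is an animal present?", the sketch below checks a frame's detections against a confidence cut-off. The `Detection` type, the `frame_has_animal` function and the 0.5 cut-off are hypothetical and chosen for illustration; they are not taken from the BBC R&D system.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """One object reported by the detector for a single frame (hypothetical shape)."""
    label: str                      # e.g. "bird" or "mammal"
    confidence: float               # detector score between 0 and 1
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def frame_has_animal(detections: List[Detection], min_confidence: float = 0.5) -> bool:
    """Return True if any detection meets the (assumed) confidence cut-off."""
    return any(d.confidence >= min_confidence for d in detections)


frame = [
    Detection("bird", 0.91, (120, 80, 40, 30)),
    Detection("mammal", 0.22, (300, 200, 60, 50)),
]
print(frame_has_animal(frame))
```

Raising the cut-off trades missed animals for fewer false alarms, which is the kind of balance a production tool would need to tune.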

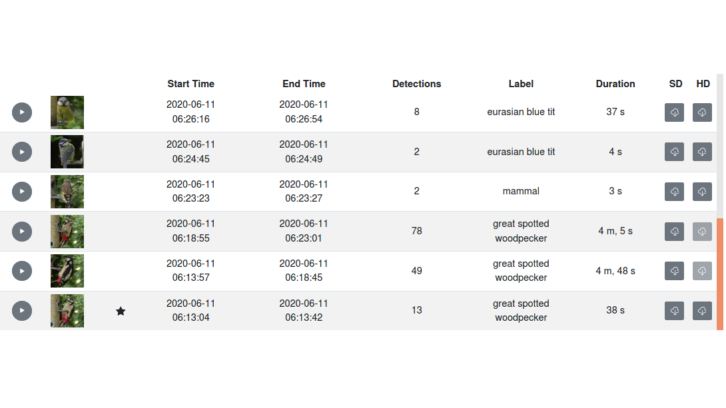

In a blog post, the R&D team said combining the motion and animal detection tools allows them to detect ‘events’ in the video where animals enter or move around the scene. “We store the data related to the timing and content of the events, and we use this as the basis for a timeline that we provide to members of the production team,” said Robert Dawes, senior research engineer, BBC R&D.
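One way to picture how motion and animal detection might be combined into timeline ‘events’ is sketched below. It assumes each frame has already been reduced to a timestamp, a motion flag and a list of animal labels. The `Event` class, the `find_events` function and the two-second `max_gap` are illustrative assumptions, not details from the blog post.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set, Tuple


@dataclass
class Event:
    """A stretch of video in which an animal was moving (hypothetical structure)."""
    start: float                      # seconds from the start of the recording
    end: float
    labels: Set[str] = field(default_factory=set)


def find_events(
    frames: Iterable[Tuple[float, bool, List[str]]],
    max_gap: float = 2.0,
) -> List[Event]:
    """Group frames that show both motion and an animal into events.

    Frames closer together than max_gap seconds (an assumed value) are
    merged into the same event.
    """
    events: List[Event] = []
    for timestamp, motion, labels in frames:
        if not (motion and labels):
            continue  # motion without an animal, or nothing happening
        if events and timestamp - events[-1].end <= max_gap:
            events[-1].end = timestamp
            events[-1].labels.update(labels)
        else:
            events.append(Event(timestamp, timestamp, set(labels)))
    return events


frames = [
    (0.0, True, []),           # something moved, but no animal was detected
    (1.0, True, ["bird"]),
    (2.0, True, ["bird"]),
    (3.0, False, []),
    (10.0, True, ["mammal"]),
]
timeline = find_events(frames)
```

Here the first frame is ignored because nothing was recognised as an animal, and the bird and mammal sightings become two separate timeline entries.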

The production team can then use this timeline to navigate through the activity on a particular camera’s video. “We also provide clipped up videos of the event, one a small preview to allow for easy reviewing of the content and a second recorded at original quality with a few seconds extra video either side of the activity. This can be immediately downloaded as a video to be viewed, shared and imported into an editing package,” added Dawes.
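The "few seconds extra video either side" that Dawes describes can be expressed as a small calculation. The sketch below is a minimal example under stated assumptions: the function name `clip_window`, the three-second default padding and the optional `video_length` limit are all hypothetical.

```python
from typing import Optional, Tuple


def clip_window(
    event_start: float,
    event_end: float,
    padding: float = 3.0,
    video_length: Optional[float] = None,
) -> Tuple[float, float]:
    """Return (start, end) in seconds for a clip padded on both sides.

    The window is clamped so it never begins before the start of the
    recording or, when video_length is given, runs past its end.
    """
    start = max(0.0, event_start - padding)
    end = event_end + padding
    if video_length is not None:
        end = min(end, video_length)
    return start, end


# An event one second into the recording cannot be padded by a full three seconds.
print(clip_window(1.0, 5.0))
```

The same window could be used for both the small preview and the original-quality clip, with only the encoding settings differing between them.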

Images representing the animals detected in the event are also extracted from the video and presented to the user. The images are then passed on to a second machine learning system, which attempts to classify the animal’s species. “The image classification system uses a convolutional neural network (CNN), specifically the Inception V3 model. If the system is confident of an animal’s species, then it will label the photograph and also add the label to the user interface. Adding this extra layer of information is intended to make it easier for a user to find the videos they’re looking for – particularly if one camera could have picked up multiple different species,” said Dawes.
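The "label only when confident" behaviour might look something like the sketch below, which takes per-species scores from a classifier and returns a label only when the top score clears a threshold. The function name `confident_label` and the 0.8 threshold are assumptions for illustration; the article does not say what confidence level the BBC system requires.

```python
from typing import Dict, Optional


def confident_label(scores: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the top-scoring species, or None if the classifier is unsure.

    scores maps species names to classifier probabilities; threshold is an
    assumed cut-off, not a value from the source.
    """
    if not scores:
        return None
    species, score = max(scores.items(), key=lambda item: item[1])
    return species if score >= threshold else None


# A clear result is labelled; an ambiguous one is left unlabelled.
print(confident_label({"blue tit": 0.93, "great tit": 0.05}))
print(confident_label({"blue tit": 0.50, "great tit": 0.45}))
```

Leaving uncertain images unlabelled keeps wrong species names out of the user interface, at the cost of some clips carrying no label at all.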

The R&D team said it is working on making the system more intelligent so that it can provide more useful information about the clips it makes. It intends to test the system again for Autumnwatch later this year.

You can read the full blog post here.