By now, most of us know that remote production relies on an IP network built on the open SMPTE ST 2110 suite of standards, which carries high-quality video, audio and data reliably and, most importantly, over wide-area (WAN) links with ease. Satellite uplinks are avoided as they are considered less reliable.

In a remote production scenario, a broadcaster or service provider sends a skeleton crew to the location of the event they want to cover. Ideally, the on-site crew is armed with a ‘flypack’, cameras and microphones. The benefits of this approach are lower travel expenses and the avoidance of resource-planning nightmares caused by in-lieu arrangements for key team members.

Although remote production is chiefly associated with sporting events – football, rugby, American football, golf, skiing, and athletics in all its shapes and guises – entertainment shows, election-night coverage, etc., are also increasingly produced with a remote workflow.

Ingredients

Depending on the workflow and budget, the on-site crew installs the cameras, microphones, a few monitors, headphone amplifiers and any props needed for interviews. Those in charge of setting everything up may not be the ones who operate the cameras during the event, but they are still needed to monitor whatever must be checked locally, and to reboot equipment or otherwise troubleshoot unexpected issues.

The cameras and microphones on location are connected to so-called video and audio gateways, i.e., 19” boxes sporting SDI inputs and outputs (for cameras) or a selection of microphone and digital inputs/outputs (for audio). Both the video and audio gateway(s) have at least one port that is connected to a network switch. Two network ports would be preferable for redundancy reasons.

The switches (again two) are connected to a fibre-optic line whose bandwidth and service (private dark fibre or public line) can usually be selected. Traffic to and from the switch is bidirectional as most gateways both transmit and receive streams.
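To get a feel for the bandwidth such a line needs, a rough estimate can be sketched as follows. The figure of 20 bits per pixel corresponds to uncompressed 4:2:2 10-bit video; the feed counts and the 20% headroom are purely illustrative assumptions, and packet overhead, audio and control traffic are ignored.

```python
def video_payload_gbps(width, height, fps, bits_per_pixel=20):
    """Active-picture bitrate in Gbit/s, ignoring RTP/UDP/IP overhead."""
    return width * height * fps * bits_per_pixel / 1e9


def link_budget_gbps(camera_feeds, return_feeds, per_feed_gbps, headroom=0.2):
    """Per-direction requirement as (venue_to_hub, hub_to_venue), headroom included."""
    up = camera_feeds * per_feed_gbps * (1 + headroom)
    down = return_feeds * per_feed_gbps * (1 + headroom)
    return up, down


# Hypothetical venue: 8 camera feeds to the hub, 2 return feeds for monitoring.
hd = video_payload_gbps(1920, 1080, 50)
uplink, downlink = link_budget_gbps(camera_feeds=8, return_feeds=2, per_feed_gbps=hd)
print(f"1080p50 feed: {hd:.2f} Gbit/s")
print(f"venue -> hub: {uplink:.1f} Gbit/s, hub -> venue: {downlink:.1f} Gbit/s")
```

Because the traffic is bidirectional, the two directions are budgeted separately; in practice the return direction is usually much lighter than the contribution direction.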

That is a good thing: cameras need to receive video for monitoring purposes, and the on-site crew also needs audio instructions. In a remote production setup, the intercom system is connected to the same IP network and also transmits and receives via IP (RAVENNA and/or AES67). In addition, an mc² console’s talkback functionality is leveraged to provide the on-site troops with instructions regarding microphone and camera placement, etc.

So much for the venue. The signals ingested there are transmitted to the production hub, which is located elsewhere. A simple way to explain how a remote production approach differs from the traditional outside broadcast workflow is that the team members who habitually sit in an OB truck to cover an event are the ones who are not on-site in a remote scenario. The director, camera shaders, slow-mo operators, audio supervisors and vision engineers work from the production hub, processing the signals received from the venue and controlling cameras, audio devices and other tools over the wide-area network that links the production hub to the venue, and vice versa.

IP-based remote production not only involves video and audio, but also control data and, increasingly, metadata. Control data allow camera control units (CCUs) to be operated conveniently, and most parameters of the devices at the venue can easily be set from the production hub, which may be thousands of miles away.

Variations

In broadcast, as in life, things are never as simple and straightforward as an author would have you believe. Think of the Remote Audio Control Room (RACR) in Germany, for instance, a fixed location where live Dolby Atmos 5.1.4 audio mixes are performed to complement the video production created inside an OB truck at the venue. Is this still a remote production given the OB truck at the venue? Technically, it is: the audio signals are mixed on an mc² console that is nowhere near the venue where the audio is ingested.

A remarkable aspect of this remote approach is that the audio streams do not even travel from the OB truck to the RACR in Darmstadt in order to be processed. Doing so would mean sending individual audio streams one way, mixing them, and sending the mixed stems back the other way. Instead, the mc² console acts as a sophisticated remote control for the A__UHD Core that does the audio processing. The core sits in a flightcase next to the OB truck, and hence conveniently close to the video side of the overall TV production. In effect, the sound supervisor at the RACR only hears the result of their mixing decisions.

What is the benefit of a remote production approach to audio mixing if there is an OB truck at the venue anyway? Immersive 5.1.4 mixes are delicate beasts that require more physical space than the audio section in an OB truck can provide. The sound supervisor needs a quiet, controlled listening environment as well as room for 10 speakers (5.1.4) and enough air between their ears and the speakers.

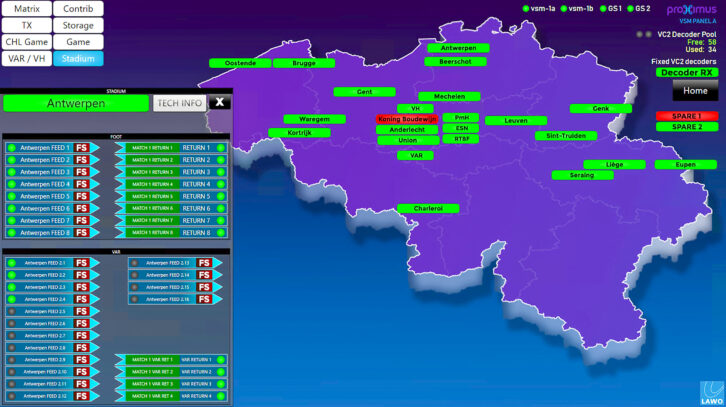

Another remote variation involves broadcasters and service providers with two (or more) distributed, but fixed production hubs; one in Antwerp and one in Brussels, for instance, or one in Oslo and another one in Bergen. Operators in either city can access the processing functionality in the other as well as perform video switching and audio mixing from their control room, and thus contribute to a production in the other city. Thanks to the power of SMPTE ST 2110-based IP networks, including more operators from a third location, or using processing power and operating skills in Brussels for a show produced in Antwerp, for instance, is also possible.

Resilience counts

Separating the mixing console from the processing unit and the I/O stageboxes was a momentous step towards improving flexibility (see the RACR example above) and redundancy. Building WAN communication support into all these devices through SMPTE ST 2110 and RAVENNA/AES67 compliance led to great expectations regarding resilience.

A recent redundancy test involved one A__UHD Core in a sporting arena in Hamburg, and a second in Darmstadt (RACR), which acts as a back-up unit. The team successfully demonstrated that if the core in Hamburg, controlled from an mc² console near Frankfurt for the live 5.1.4 broadcast mix, becomes unavailable, the core closer to the console can immediately take over. The physical location of the second core is irrelevant, by the way, as long as it is connected to the same IP network, and as long as all relevant devices support ST 2022-7 redundancy.
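The idea behind ST 2022-7 is that the same stream is sent over two separate network paths and the receiver forwards whichever copy of each packet arrives first. A much-simplified sketch of that merge step might look like this. The `Packet` type and `merge_redundant` function are hypothetical names for illustration; a real receiver also handles sequence-number wrap-around, reordering and a bounded buffer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # RTP-style sequence number (wrap-around ignored here)
    path: str       # "A" or "B": which network leg delivered this copy
    payload: bytes


def merge_redundant(arrivals):
    """Yield the first copy of each sequence number, dropping later duplicates."""
    seen = set()
    for pkt in arrivals:
        if pkt.seq in seen:
            continue
        seen.add(pkt.seq)
        yield pkt


# Packets in arrival order; the path-A copy of packet 2 was lost in transit.
arrivals = [
    Packet(1, "A", b"p1"), Packet(1, "B", b"p1"),
    Packet(2, "B", b"p2"),
    Packet(3, "A", b"p3"), Packet(3, "B", b"p3"),
]
merged = list(merge_redundant(arrivals))
print([(p.seq, p.path) for p in merged])
```

The merged output contains packets 1, 2 and 3 without a gap, even though one path dropped a packet, which is why a loss on a single leg goes unnoticed downstream.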

WAN-enabled redundancy is an important building block for a solid resilience strategy. It allows for so-called ‘air-gapped units’, i.e., hardware in two separate locations, to ensure continuity when the ‘red’ data centre is flooded or on fire: the redundant, ‘blue’ data centre automatically takes over.
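One common way to automate such a takeover is a heartbeat check: each site reports in regularly, and the first site in a preference order that is still reporting becomes active. The sketch below assumes this heartbeat model; the function name, the two-second timeout and the site labels are illustrative and not taken from any particular product.

```python
def choose_active(last_heartbeat, now, timeout=2.0, preferred=("red", "blue")):
    """Return the first site in preference order with a fresh heartbeat, else None."""
    for site in preferred:
        seen = last_heartbeat.get(site)
        if seen is not None and now - seen <= timeout:
            return site
    return None


# Both sites healthy: the preferred 'red' site stays active.
print(choose_active({"red": 100.0, "blue": 100.5}, now=101.0))
# 'red' has been silent for 11 seconds: 'blue' takes over.
print(choose_active({"red": 90.0, "blue": 100.5}, now=101.0))
```

Keeping the decision a pure function of timestamps makes the fail-over rule easy to test independently of the network.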

A second strategy for flexible redundancy is to decentralise what used to be in one box. For maximum resilience, one device should do the processing, while a COTS server or Docker container relays the control commands it receives from a controller to the processing core, and a switch fabric does the routing. Separating control from processing and routing, and making all three redundant, minimises the risk of downtime in remote, distributed, and at-home production setups. All devices or CPU services can be in different geographic locations.

Resilience necessarily includes control. VSM achieves seamless control redundancy with two pairs of COTS servers stationed in two different locations and automatic fail-over routines. Hardware control panels are not forgotten: if one stops working, connecting a spare, or firing up a software panel, and assigning it the same ID – which takes less than a minute – restores interactive control. (The control processes as such are not affected by control hardware failures, by the way.)
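The reason a spare panel can step in so quickly is that the configuration belongs to the ID held by the control system, not to the physical device. A toy model of that idea is sketched below. `PanelRegistry` and its methods are hypothetical and do not represent the VSM API.

```python
class PanelRegistry:
    """Maps a logical panel ID to whichever hardware or software panel holds it."""

    def __init__(self):
        self._layouts = {}   # panel_id -> button layout kept by the control servers
        self._devices = {}   # panel_id -> device currently bound to that ID

    def define(self, panel_id, layout):
        self._layouts[panel_id] = layout

    def attach(self, panel_id, device):
        """Bind a device to an ID and return the layout it should display."""
        self._devices[panel_id] = device
        return self._layouts[panel_id]

    def device_for(self, panel_id):
        return self._devices.get(panel_id)


registry = PanelRegistry()
registry.define("panel-07", ["CAM 1", "CAM 2", "PGM"])
registry.attach("panel-07", "hardware-panel-A")
# hardware-panel-A fails; a software panel is given the same ID
layout = registry.attach("panel-07", "software-panel-B")
print(layout, registry.device_for("panel-07"))
```

The replacement inherits the original layout without any reconfiguration, because nothing about the layout was ever stored on the failed panel.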

A genuinely resilient broadcast or AV network is a self-healing architecture that always finds a way to get essences from A to B in a secure way. The same applies to HOME Apps that can run on any standard server anywhere on the network – even in the public cloud – and be spun up in a different part of the world in the event of a server or network failure.

Users may not know, or care, where those locations are, as long as the tools they use to control them do. Those tools quickly find alternatives to keep the infrastructure humming, whether in a remote or distributed production setting, or in an at-home configuration.