Over the last decade, dialogue intelligibility on TV has become quite the hot topic.

A few years ago it even earned its own word, when “mumblegate” entered the vocabulary to describe television dramas whose dialogue was difficult to hear. These programmes caused outrage across the spectrum, but there is one group of people for whom it is a more serious issue.

According to the World Health Organisation, more than a quarter of people older than 60 are affected by hearing loss, and Statista’s 2021 figures show that 19 per cent of Europe’s population is over 60, a higher proportion than in any other region.

That’s a lot of people.
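As a rough back-of-envelope estimate, and assuming (which neither source states) that the WHO’s global rate also applies to Europe’s over-60s, the two figures combine as:

$$
\underbrace{0.19}_{\text{share of Europeans over 60}} \times \underbrace{0.25}_{\text{share of over-60s with hearing loss}} \approx 0.048
$$

Under that assumption, that is at least roughly one European in 21, before counting anyone younger than 60 with hearing loss.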

Our consumption of content has also exploded, across a wider range of devices and an even wider range of locations; we can enjoy programming at work, at the gym or on the bus. It’s only a matter of time before the issue raises its muffled head again.

But there’s an element of Next Generation Audio (NGA) that may provide some clarity, and MPEG-H might provide the key to unlocking it.

Making it personal

NGA is a term that covers several audio enhancements, but unlike its headline-grabbing immersive sibling, personalisation isn’t about making things bigger; it’s about making things more accessible and giving viewers more control over what they hear. Viewers could, for example, independently adjust elements such as commentary, language and crowd noise.

At this year’s IBC Show in Amsterdam, Salsa Sound and Fraunhofer IIS provided a world-premiere look into how this might work, demonstrating a complete end-to-end automated workflow for personalising live sports audio.

The result of five years of development, the demo was made possible by enabling Salsa’s MIXaiR production tool to author output for Fraunhofer’s popular MPEG-H codec.

What is MIXaiR?

“MIXaiR is a production tool that uses AI to completely automate the complex audio workflows of live sports broadcast,” explains co-founder and director of Salsa Sound, Ben Shirley.

“Mixing live sports audio for broadcast has always been resource-intensive, with the mixer constantly manipulating console faders to ensure the mic nearest the ball is always in the mix, whilst managing the balance of this mix against the crowd and commentary, and remembering that the output may be needed in several formats like stereo, 5.1 or even immersive!” he adds.

“MIXaiR manages all the available inputs and microphone feeds and can create multiple audio mixes in real-time. For example, multiple commentators, crowd mics, studio presentation mixes and pitch-side mics can be grouped to create different outputs for multiple audiences, such as home, away or alternate language mixes, as well as outputs matched to platform-specific formats and loudness requirements.”

It does this by treating incoming feeds as audio objects and applying the desired relative loudness to each element. The AI engine can then output different groupings of those objects, rendered to different loudspeaker configurations.
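To make the idea concrete, here is a minimal Python sketch of object-based group rendering: each feed stays a separate object, and an output group is simply a table of relative levels in dB. The names `AudioObject` and `render_group`, and the levels used, are hypothetical and not MIXaiR’s API; a real engine would also handle loudness normalisation and rendering to specific loudspeaker layouts.

```python
from dataclasses import dataclass


def db_to_linear(level_db: float) -> float:
    """Convert a relative level in decibels to a linear amplitude factor."""
    return 10 ** (level_db / 20)


@dataclass
class AudioObject:
    """One incoming feed (commentary, crowd mic, pitch-side mic...) kept separate."""
    name: str
    samples: list[float]  # a block of mono samples for this feed


def render_group(objects: dict[str, AudioObject], levels_db: dict[str, float]) -> list[float]:
    """Mix the objects named in levels_db, each scaled by its relative level.

    Objects not listed in levels_db are simply left out of this output group.
    """
    length = max(len(objects[name].samples) for name in levels_db)
    mix = [0.0] * length
    for name, level_db in levels_db.items():
        gain = db_to_linear(level_db)
        for i, sample in enumerate(objects[name].samples):
            mix[i] += gain * sample
    return mix


# Hypothetical feeds and levels: the same objects feed two different outputs.
feeds = {
    "commentary_en": AudioObject("commentary_en", [0.5, 0.5]),
    "commentary_es": AudioObject("commentary_es", [0.4, 0.4]),
    "crowd": AudioObject("crowd", [0.2, 0.2]),
    "pitch": AudioObject("pitch", [0.1, 0.1]),
}
home_mix = render_group(feeds, {"commentary_en": 0.0, "crowd": -6.0, "pitch": -3.0})
spanish_mix = render_group(feeds, {"commentary_es": 0.0, "crowd": -6.0, "pitch": -3.0})
```

Keeping the feeds as objects means an alternate-language output is just a different level table over the same inputs, rather than a second mix built by hand.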

Thanks to Salsa’s work with Fraunhofer, these mixes can now also be authored as MPEG-H output.

What is MPEG-H?

MPEG-H was standardised in 2015 and is increasingly popular; in fact, it is key to a number of international broadcast standards. It is included in the ATSC, DVB, TTA (Korean TV) and SBTVD (Brazilian TV) standards, and Fraunhofer works with consumer electronics (CE) manufacturers like LG, Samsung, Sennheiser and Sony to develop products with MPEG-H support.

“There are many elements which make up the MPEG-H Audio standard, but MPEG-H Part 3 is all about 3D Audio,” says Yannik Grewe, senior engineer of audio production technologies at Fraunhofer IIS.

“It enables immersive and spatial audio as channels, objects or ambisonic signals, as well as universal delivery over any loudspeaker configuration. In short, MPEG-H is an audio standard with features designed to provide viewers with the flexibility to adapt the content to their own preferences, regardless of the playback device.

“This means that in addition to MPEG-H Audio being adopted by various CE manufacturers, it has also attracted interest from production companies, streaming service providers and broadcasters, and Fraunhofer is working with multiple industry partners like Blackmagic, Linear Acoustic and Jünger Audio.”

Salsa Sound is also on that list, and the shared goal of automating personalisation saw collaboration between the two companies ramp up at the start of 2022.

“The aim was to make it easy for broadcasters to produce live content that makes use of the personalisation of MPEG-H,” explains Shirley. “The integration involved creating an additional output group type which provides an authored MPEG-H output and control of all metadata. In a standard SDI-based infrastructure the MPEG-H output consists of a total of 16 channels: one channel carrying MPEG-H metadata and 15 channels of audio, which can consist of whatever channels, presets or groups are required to allow viewers to switch between different audio options. These can be sound beds, objects or groups such as commentaries, different languages or crowd mixes.”
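As a rough illustration of that 16-channel budget, the Python sketch below packs beds, objects and groups into consecutive SDI channels while reserving one channel for metadata. Which channel actually carries the metadata is not stated here, so `metadata_slot` defaults to channel 16 purely as an assumption; `Component` and `build_channel_map` are hypothetical helpers, not part of any Fraunhofer or Salsa tool.

```python
from dataclasses import dataclass

SDI_CHANNELS = 16                                  # total channels in the SDI MPEG-H output
METADATA_CHANNELS = 1                              # one channel carries MPEG-H metadata
AUDIO_CHANNELS = SDI_CHANNELS - METADATA_CHANNELS  # 15 left for audio


@dataclass
class Component:
    name: str
    kind: str      # "bed", "object" or "group" (illustrative labels only)
    channels: int  # number of SDI channels the component occupies


def build_channel_map(components: list[Component], metadata_slot: int = SDI_CHANNELS) -> dict[int, str]:
    """Assign components to 1-based SDI channels, reserving metadata_slot for metadata.

    metadata_slot defaults to 16 as an assumption; the real position may differ.
    """
    if not 1 <= metadata_slot <= SDI_CHANNELS:
        raise ValueError(f"metadata_slot must be between 1 and {SDI_CHANNELS}")
    needed = sum(c.channels for c in components)
    if needed > AUDIO_CHANNELS:
        raise ValueError(f"{needed} audio channels requested, only {AUDIO_CHANNELS} available")
    channel_map = {metadata_slot: "MPEG-H metadata"}
    slot = 1
    for comp in components:
        for i in range(comp.channels):
            if slot == metadata_slot:  # skip over the reserved metadata channel
                slot += 1
            channel_map[slot] = f"{comp.name} ({comp.kind}) ch{i + 1}"
            slot += 1
    return channel_map


# Hypothetical programme: a 5.1 bed, two commentary objects and a stereo crowd group.
layout = build_channel_map([
    Component("5.1 bed", "bed", 6),
    Component("English commentary", "object", 1),
    Component("Spanish commentary", "object", 1),
    Component("Home crowd", "group", 2),
])
for channel in sorted(layout):
    print(channel, layout[channel])
```

The point of the check is the budget Shirley describes: whatever mix of beds, objects and groups a production needs has to fit in the 15 audio channels left once metadata is accounted for.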

What was on show at the Show?

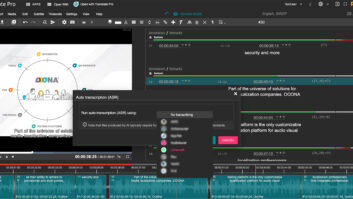

The IBC demo showcased both ends of the production process.

At the production end, a DAW played out raw microphone feeds from a football match into MIXaiR, which mixed the pitch-side mics, managed commentary levels and crowd mixes, and output them as either channel beds or audio objects with MIXaiR-generated MPEG-H metadata. The 16 channels of MPEG-H audio and metadata were encoded in a broadcast encoder and sent to an MPEG-H-compliant set-top box on the other side of the stand.

“The signals were defined as MPEG-H Audio components and user interaction was enabled,” says Grewe. “This resulted in three presets for user interaction: a standard TV mix, an enhanced dialogue mix and a no-commentary mix. We also provided the ability to manually adjust the prominence of commentary in relation to the stadium atmosphere.
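The interaction Grewe describes can be pictured as a small set of presets plus one bounded user control. The Python sketch below is loosely modelled on that idea; the preset level values, the ±10 dB commentary range and the names `Preset` and `apply_user_offset` are assumptions for illustration, not the values or metadata fields used in the demo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preset:
    name: str
    levels_db: dict[str, float]  # component -> level in dB; absent means muted


# Hypothetical level values, chosen only for illustration.
PRESETS = {
    "standard": Preset("Standard TV mix", {"commentary": 0.0, "atmosphere": 0.0}),
    "dialogue": Preset("Enhanced dialogue", {"commentary": 6.0, "atmosphere": -6.0}),
    "no_commentary": Preset("No commentary", {"atmosphere": 0.0}),
}

# Assumed range within which the viewer may move the commentary level.
COMMENTARY_RANGE_DB = (-10.0, 10.0)


def apply_user_offset(preset: Preset, commentary_offset_db: float) -> dict[str, float]:
    """Return the preset's levels with the viewer's commentary offset applied.

    The offset is clamped to COMMENTARY_RANGE_DB so the viewer cannot push the
    commentary outside the limits the producer allows. The preset itself is
    left unchanged.
    """
    low, high = COMMENTARY_RANGE_DB
    offset = min(max(commentary_offset_db, low), high)
    levels = dict(preset.levels_db)
    if "commentary" in levels:
        levels["commentary"] += offset
    return levels


# A viewer picks "Enhanced dialogue" and nudges commentary up a further 3 dB.
print(apply_user_offset(PRESETS["dialogue"], 3.0))
```

In this sketch the presets and the offset are resolved at the receiver, so a single encoded stream can carry every option instead of one stream per mix.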

“The automatically generated digital audio channels were embedded with the corresponding video and fed into the MPEG-H encoder. In the ‘home’ environment, the set-top box received the encoded signal over IP and viewers were able to select their preferred audio option using a standard remote control.”

This level of integration means that MPEG-H metadata can be authored to create a complete scene ready for encoding and transmission, with all the end-user personalisation features that the format enables.

The game is afoot

“Some service providers already offer a second audio stream to select between an audio mix with and without commentary, but this implementation is very limited,” adds Grewe. “With MPEG-H such user interaction can be achieved without the necessity to switch streams.”

Which brings us full circle and back to mumblegate. Personalisation can provide the ability to give people with different requirements access to the same content, and AI could help do this without piling additional pressure onto production staff.

“I’ve always considered personalisation to be the greatest benefit of NGA. Not everyone wants immersive audio setups or soundbars in their home, but everyone watches media in a different environment, on different equipment and with different individual preferences and needs,” says Shirley.

“Object-based audio facilitates access – the mix may have enhanced dialogue for those with a hearing impairment, and an audio object could even be an audio description for visually impaired viewers.

“The possibilities are huge for increasing both engagement and accessibility,” he concludes.