There’s no doubt everyone is talking about artificial intelligence and its impact on pretty much every aspect of technology at the moment.

It’s an area attracting a lot of discussion in the media industry, not least because of the current Hollywood strikes. Among the issues the two sides are at odds over is the use of AI in both writing and acting.

But what impact could AI have on the news we watch? At a time when many broadcasters are striving to move as far away from ‘fake news’ as possible, could AI be beneficial to news production?

Sky News recently created and tested its first-ever AI news reporter, an experiment to see whether generative AI could replace the role of a journalist.

It used AI to write a 300-word news article for Sky News’ website as well as an accompanying 90-second TV report.

The idea grew out of a discussion within Sky’s newsroom, Tom Clarke, science and technology editor for Sky News, tells TVBEurope. “There was a lot of hype around these large language models like ChatGPT, their incredible capabilities and the potential risks that those capabilities posed,” he explains. “There is this other angle of how AI might replace the jobs we do. We wanted to test all of those things so we thought, why don’t we test it doing the job of a journalist and push these technologies as far as we can?”

To complete the project, the Sky News team used “off the shelf” components rather than writing and training their own AI. The key aim, says Clarke, was to visualise and unpack some of the more esoteric or abstract ideas around AI for viewers. The team began by using ChatGPT as both a journalist and an editor.

The project employed a web crawler, built by Sky News, which searched specific news websites and fed what it found into ChatGPT as source material.

“Anybody can ask ChatGPT etc to write a news story on any subject and it will do a remarkably good job generally. But it’s not really doing the job of a journalist,” says Clarke. “Our idea was to set the chatbots up to play off each other, to sort of simulate a thought process.”

That meant one bot was given prompts in order to “report” and the other was used as an editor. The “reporter” generated a story idea based on the inputs Clarke and the team gave it. The “editor” was given a different prompt, which was to critique the story and decide whether or not it was relevant. “We gave it various prompts, relevance, newsworthy, timely, whatever, and then ran a number of cycles to and fro between the reporter and the editor,” he continues. “It’s almost like simulating a thought process is how I understood it myself. They’re both powered by the same language models, so they haven’t got different brains behind them, but then you give them different prompts to try to hone that task.”
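Sky News has not published its code or prompts, so the following is only a minimal Python sketch of the reporter/editor pattern Clarke describes: one language model, two different role prompts, and a fixed number of back-and-forth cycles. Everything named here is hypothetical and not taken from Sky’s project: the `ChatModel` function type, `reporter_editor_loop`, the prompt wording, the convention that the editor replies “APPROVED” when satisfied, and the deterministic `toy_model` that stands in for a real chatbot so the example runs offline.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: (role prompt, message) -> reply text.
# In practice this would wrap a hosted language model; both roles share it,
# mirroring Clarke's point that the two bots have the same "brain".
ChatModel = Callable[[str, str], str]

REPORTER_ROLE = (
    "You are a news reporter. Using the source material, propose or revise "
    "a story. Address any editor feedback you are given."
)
EDITOR_ROLE = (
    "You are a news editor. Critique the draft for relevance, newsworthiness "
    "and timeliness. Reply APPROVED if it is ready, otherwise give feedback."
)


def reporter_editor_loop(
    model: ChatModel, sources: str, max_rounds: int = 3
) -> Tuple[str, List[str]]:
    """Alternate reporter and editor prompts on the same model.

    Returns the final draft and the editor's reply from each round.
    """
    feedback_log: List[str] = []
    # The reporter writes a first draft from the crawled source material.
    draft = model(REPORTER_ROLE, f"Sources:\n{sources}")
    for _ in range(max_rounds):
        # The editor critiques the current draft.
        verdict = model(EDITOR_ROLE, f"Draft:\n{draft}")
        feedback_log.append(verdict)
        if verdict.strip().upper().startswith("APPROVED"):
            break
        # The reporter revises, seeing its sources, its draft and the critique.
        draft = model(
            REPORTER_ROLE,
            f"Sources:\n{sources}\n\nDraft:\n{draft}\n\nEditor feedback:\n{verdict}",
        )
    return draft, feedback_log


def toy_model(role: str, message: str) -> str:
    # Deterministic stand-in for a language model, for demonstration only.
    if role == EDITOR_ROLE:
        return "APPROVED" if "revised" in message else "Needs a clearer news angle."
    return "revised draft" if "Editor feedback" in message else "first draft"


if __name__ == "__main__":
    final_draft, log = reporter_editor_loop(toy_model, "crawled article text")
    print(final_draft)  # revised draft
    print(log)          # ['Needs a clearer news angle.', 'APPROVED']
```

Capping the loop with `max_rounds` is a design assumption: without it, an editor prompt that never approves would keep the two bots cycling indefinitely.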

The team also used Stable Diffusion, which can generate images using AI. Without human intervention, the editor chatbot generated a prompt based on the news story, which was then sent to Stable Diffusion to create an image. “We didn’t tell it what that prompt should be; it had to come up with its own,” explains Clarke. “So then it would automatically make that request and the image was downloaded to one of our computers.”

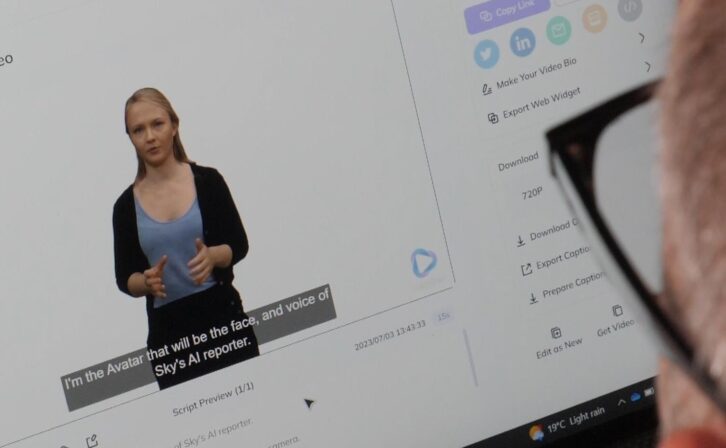

AI was further employed to create an on-screen reporter for the news story. “We thought wouldn’t it be fun to use one of these AIs that generate an avatar, a human-like face,” Clarke continues. “We used a company based in California because we looked around and theirs was the most realistic. We trained it with a few minutes of my colleague reading out old scripts of mine, nothing specific, and then manually gave it the text generated by ChatGPT.”

In total, the project took around a month to complete – with much of that time spent training the chatbots to write like journalists (never the easiest of tasks).

Clarke and the team wanted to show viewers exactly how the report was put together, so they felt it was key to show where humans were involved. That turned out to be predominantly in the edit suite.

“There were moments when we were working with it and figuring out how to make this into a story when there were five of us in the room trying to make it work,” he says. “So we realised it’s not going to be replacing us anytime soon. It did make me think, there’s quite a lot of human involvement to make sure this thing gives us what we want and probably speaks to some degree to the amount of job replacement we’re actually going to see in the short term.”

The whole idea of using AI to generate images for both the website and TV package also raised questions around integrity. “We work in the news industry,” states Clarke. “Realistically we’d never want to generate fake images to put on the news. We’re in the news business, we’re about telling people what’s really going on. So I think the only way realistically we’re going to be using image-generating AI is to illustrate what’s going on in the world of AI. I don’t think we’d ever use it to generate the images that we put on air.

“Could you use an AI maybe to generate images for explanatory purposes if you really are unable to show that by any other means? Maybe it’s a tool you can see applied there. But how could that be better than a really good graphic designer at the moment? I’m not really sure in news whether AI image generation or video generation has got a place as I currently understand it.”

Clarke says the project did help highlight some areas where artificial intelligence could be useful, such as archiving and transcribing incoming video feeds. “You can imagine an AI transcribing the audio into text, but also cataloguing pictures, which makes them much easier to find in a 24-hour breaking newsroom. That could save a lot of time and speed things up.

“But we also found it makes mistakes, and it is sometimes very confident in the mistakes it makes. It was certain that milk spilt on motorways makes them safer. So, are we at this point yet where we could trust what it’s doing even on those simple tasks? I think you’d have to be really, really confident in your system and have run it in parallel with your existing workflows for a long time before you would risk that transitioning.”