Disaster recovery or business continuity systems are a necessary expense for most broadcasters, ensuring that services can continue if a major incident occurs at their main playout centre. Disruption to services could be caused by natural disasters such as floods, earthquakes, hurricanes and pandemics, as well as man-made incidents such as wars, riots, power outages, fires, system hacks and anything else that can prevent access to the normal playout centre or playout system.

The issue that many broadcasters face is deciding how much should be spent on a Disaster Recovery (DR) system to protect their playout. The ideal from a service continuity perspective would be to set up a second system using the exact same technology as the main system and to run it in parallel. Then, if a major incident occurred, it would be relatively easy to switch over to the redundant system and continue. This, however, is very costly, especially on-premise: it would involve the cost of the redundant system itself, the cost of a second building far enough away from the main playout centre that it won’t be affected by the same disaster, and the ongoing costs of heating, air conditioning, lighting, maintenance and staff. Unfortunately, not many broadcasters can afford this sort of investment to protect against something that might never happen. But are there other less expensive ways to provide a high level of protection and peace of mind?

This article looks at what kind of strategies could be adopted to provide a compromise between continuity of service and costs. It discusses the types of configurations that are now possible and how the cloud’s elasticity can be of benefit.

What is an acceptable recovery time?

Each broadcaster will have a different view on how much downtime is acceptable between the time a major situation occurs and when the station is back on air. This will depend partly on how much they are willing to spend on DR and partly on what their attitude is to risk and service reliability.

For some broadcasters, it might be acceptable in the case of a very rare event to be off air for a few hours and in the first instance to play content via an evergreen playlist until the disaster recovery system can be spun up. Media and schedules can then be uploaded and playout can resume roughly in time with the original schedule, albeit after a break in service. For others, the concept of playing evergreen media is not acceptable and the system must be able to resume playout exactly in synchronisation with where the main system would have been within around 30 minutes. For some tier 1 broadcasters, no downtime can be tolerated at all.

How the cloud can help

One of the benefits of the cloud, when a system is architected properly, is the elasticity it offers. Resources can be spun up when needed and then taken down again when no longer required to save costs. Systems can be deployed easily using templates and resources can be assigned as and when needed. It is also possible to run core components, carrying the necessary configuration parameters, using only a relatively small amount of resources, so that the launch time is minimised when the remainder of the system is required. In addition, the cloud can be accessed from anywhere in the world via a browser using secure connections, which is a very useful feature if a disaster occurs that makes travel or access to buildings difficult.

But to get the maximum advantage of hosting in the cloud, the solution has to be cloud native. A modern cloud-native product would be designed using microservices, containers, and orchestration. Rather than one large monolithic application, there are a large number of smaller services that run in containers and are deployed and orchestrated by an orchestration layer, such as Kubernetes. The advantage is that you get the resilience and elasticity that the orchestration provides.

But how can costs be saved?

Running playout in the cloud offers many potential cost savings. Apart from the obvious savings on expensive on-premise hardware and the maintenance that goes with it, there are savings on equipment rooms, air conditioning, lighting and the personnel needed to manage all the infrastructure. Instead, the software is spun up in the cloud and configured to meet the needs of the channel. This includes setting up the bit rates, frame rates, formats, graphics, subtitling, I/O and so on.

It is very easy to launch new channels in the cloud. Some systems can actually deploy new channels in minutes. That’s very different from the several weeks, or even months, of planning, rack layouts, wiring, commissioning and so on that older on-premise systems required. It’s also just as easy to decommission channels when they are no longer required. This means you only pay for the infrastructure that you actually use, usually on a Pay-As-You-Go (PAYG) basis. This greatly reduces the risk of new channel launches and is ideal for DR channels.

Possible DR configurations

One low-cost solution would be to have a preconfigured system as a cold standby, either on-premise in another location or in the cloud. If a disaster occurs, the DR system can be started, media uploaded and channels played. The advantage of this method is that the cost would be quite low. Apart from the initial system cost, there would be little running cost until the system is needed. Hosting in the cloud is likely to be cheaper than hosting on-premise, as there would be almost no initial capital outlay and no space costs. The disadvantage, of course, is the time it would take before the system is ready to take over from the main system. There is also the issue of making sure that the media and playlists are available to the DR system in case the main system goes down, so there could be some cost in ensuring that happens. Synchronising with the original playlist would not be easy and would have to be done manually.

An alternative would be to run a system in parallel with the main playout system. Ideally, this would be synchronised with the main system electronically so as to avoid the operational costs of operating a second system. But in any case this would be an expensive solution, whether it is hosted in the cloud or on-premise. For some broadcasters, this would be the only option, but for most a cheaper alternative is required that does not take hours to activate when needed.

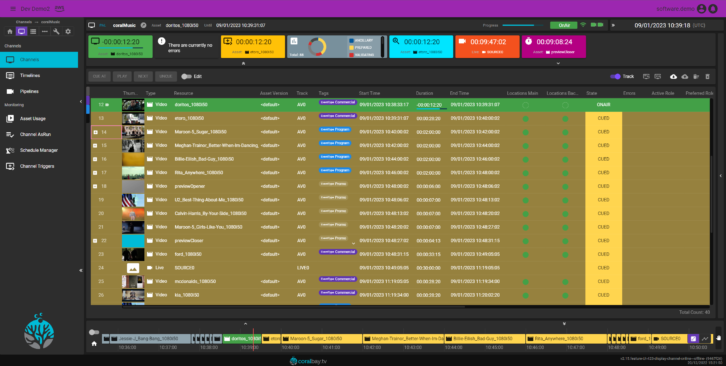

Another possibility would be to have a DR system hosted in a public cloud with only the minimum resources running to keep costs down. When needed, it spins up the necessary resources and starts playing out. coralPlay is able to run playlists even when the playout devices are not available. This means that the most compute-hungry resources do not have to be online until they are needed, saving not just the compute costs but also the egress fees, which can be the most expensive element of playing out TV channels from public clouds. By running the playlist, synchronisation is maintained with the main playout system. If a disaster occurs, it is very easy to initiate deployment of the playout servers, which replicate in software the functionality of traditional hardware products, providing video server, master control switcher, DVE, graphics, subtitling, scaling, SCTE triggering and many other functions. Spinning up these hosts takes approximately 10 to 15 minutes. Once they are up, coralPlay’s media management starts to move media from deep storage to the playout hosts. This is done in order of earliest on-air time across all channels, so that what is needed first is processed first.
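
The ordering of media transfers by earliest on-air time can be illustrated with a minimal sketch. This is not coralPlay's implementation; the names `TransferJob`, `build_transfer_queue` and `next_transfers` are hypothetical, and the sketch assumes each channel has its own playout host cache, so an item is moved once per channel at its earliest use.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(order=True)
class TransferJob:
    """One media item to move from deep storage (hypothetical structure)."""
    on_air: datetime                      # only this field is used for ordering
    media_id: str = field(compare=False)
    channel: str = field(compare=False)


def build_transfer_queue(playlists):
    """Merge all channels' events into one heap ordered by on-air time.

    `playlists` maps a channel name to a list of (media_id, on_air) tuples.
    Assumption: each channel caches media separately, so a repeated item
    is queued once per channel, at its earliest on-air time.
    """
    earliest = {}
    for channel, events in playlists.items():
        for media_id, on_air in events:
            key = (channel, media_id)
            if key not in earliest or on_air < earliest[key].on_air:
                earliest[key] = TransferJob(on_air, media_id, channel)
    queue = list(earliest.values())
    heapq.heapify(queue)
    return queue


def next_transfers(queue, count):
    """Pop up to `count` jobs, most urgent first."""
    return [heapq.heappop(queue) for _ in range(min(count, len(queue)))]
```

A single queue shared by all channels means that a channel with an imminent event is never starved by a long backlog on another channel.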

It is also possible to play directly from object storage rather than caching media to the playout hosts, which saves time in a disaster scenario. This does of course assume that the media required to run the channels has been uploaded to object storage in the cloud in advance, as it would be for any normal playout. Some playout systems can automate this process to minimise the operational load on the playout operators.

One other very important feature is the ability to automatically join in progress at any point in the programme in order to ensure synchronisation with the original schedule. When the video pipelines are spun up and the media is detected, coralPlay cues the current event at an offset from its start and schedules it to play at a point in the future, so that when it starts to play it will be exactly in sync with the playlist and at the same point it would have reached had the resources been there all the time. The next event is also cued, ready to go when the current event ends.
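
The arithmetic behind joining in progress can be sketched as follows. This is an illustration under stated assumptions, not coralPlay's actual logic: the function name `join_in_progress` and the `cue_lead` parameter (the time allowed for cueing before playback starts) are hypothetical.

```python
from datetime import datetime, timedelta


def join_in_progress(events, now, cue_lead):
    """Work out where to join the schedule so playback lands in sync.

    `events` is a list of (media_id, start, duration) tuples sorted by start.
    Playback is scheduled for `now + cue_lead`, and the offset into the
    media is measured at that future instant, not at `now`.
    Returns (media_id, offset, play_at, next_media_id), or None if no
    event is scheduled at the planned start time.
    """
    play_at = now + cue_lead
    for index, (media_id, start, duration) in enumerate(events):
        if start <= play_at < start + duration:
            offset = play_at - start
            # The following event, if any, is cued in advance as well.
            next_id = events[index + 1][0] if index + 1 < len(events) else None
            return media_id, offset, play_at, next_id
    return None
```

For example, if a 30-minute programme started at 12:00 and the pipelines become ready at 12:10:00 with a 10-second cue lead, the programme is cued 10 minutes and 10 seconds in and set to start at 12:10:10.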

To ensure accurate synchronisation with the main playlist at the main playout centre, it is possible to continually read in any changes. This could be done by reading in regular playlist updates and regularly replacing the playlists in the DR system. With some systems it is also possible to read in dynamic updates so that the DR system follows the main system exactly. One issue with this approach, though, is making sure that an update from a failed or failing system does not compromise the on-air schedules in the DR system.
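
One way to guard against a bad update from a failing main system is to validate each update before it replaces the DR playlist. The sketch below is hypothetical throughout: the `PlaylistUpdate` and `DrPlaylist` names, the sequence-number scheme, the 30-second staleness limit and the rule that an empty playlist is rejected are all assumptions made for illustration, not features described by any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PlaylistUpdate:
    sequence: int        # assumed to increase with every update sent
    sent_at: datetime    # when the main system produced the update
    events: list         # the replacement playlist


class DrPlaylist:
    """Holds the DR copy of a playlist and filters incoming updates."""

    def __init__(self, max_age=timedelta(seconds=30)):
        self.max_age = max_age
        self.sequence = 0
        self.events = []

    def apply(self, update, now):
        """Apply the update if it looks sane; return True when accepted."""
        if update.sequence <= self.sequence:
            return False              # duplicate or out-of-order update
        if now - update.sent_at > self.max_age:
            return False              # stale: the sender may be struggling
        if not update.events:
            return False              # never wipe the on-air schedule
        self.events = list(update.events)
        self.sequence = update.sequence
        return True
```

Rejected updates leave the last known good playlist in place, so the DR system keeps following the schedule it already trusts.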

Conclusion

Disaster recovery systems are a necessary expense for most broadcasters, but a full backup system located in another building is not financially feasible for most channels. Using a cloud-native playout product, it is possible to provide a much lower cost DR system in a public cloud, whereby channel playout can automatically resume exactly in time with the original schedule in less than 30 minutes. The running costs of such a system can be very low until a disaster occurs and the system is needed.