Retrospective re-purposing of content for new media channels will saddle broadcasters with a financial loss, warned Kevin McCartney, senior VP of sales at digital distribution specialist Anystream, at a recent seminar. “New revenue models require a systems-based approach, because manual processes will not scale as the level of demand or content increases,” said McCartney, speaking at a ‘Real Profits from New Digital Platforms’ seminar in London organised by Marquis Broadcast, writes Richard Dean.

In a point echoed by fellow presenters Dr Peter Thomas, CTO at media asset management company Blue Order, Omneon Video Networks Senior Solutions Director Jason Danielson and Granby Patrick, Partner Director at Marquis Broadcast, McCartney said the key tenet of this approach was the early generation and retention of metadata.

“A lot of information known at the production stage is simply discarded,” said Patrick, “yet the cost of keying-in information once at the ingest stage is negligible compared to the efforts required to retrieve it later during post production. Inefficiencies may be hidden if they are spread across different departments, but are still suffered by the company as a whole,” he said, adding that scrimping on metadata requirements from production contractors was a false economy.

Examples include the position of fade-to-black cuts added during editing to accommodate ads, programme-maker and performer details, and even the programme synopsis. Such metadata allows content to be cost-effectively re-purposed with automated processes. But all too often the information is redefined or re-keyed in separate downstream processes, significantly increasing costs.
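As a rough illustration of the point, the metadata listed above could be held in a single record that is keyed in once and then read by every downstream process. The sketch below is hypothetical: the `ProgrammeMetadata` class, its field names and the `split_at_breaks` helper are assumptions made for this example and were not described by any of the speakers.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ProgrammeMetadata:
    """Hypothetical record keyed in once and carried downstream."""
    title: str
    synopsis: str
    credits: list[str] = field(default_factory=list)
    # Positions, in seconds, of fade-to-black cuts added for ad breaks.
    fade_to_black_secs: list[float] = field(default_factory=list)


def split_at_breaks(
    meta: ProgrammeMetadata, duration_secs: float
) -> list[tuple[float, float]]:
    """Return (start, end) segments between the recorded fade-to-black cuts.

    A new-media channel could use these segments to re-package a programme
    automatically instead of having the break points re-keyed by hand.
    """
    # Ignore any cut positions that fall outside the programme itself.
    points = sorted(p for p in meta.fade_to_black_secs if 0 < p < duration_secs)
    edges = [0.0, *points, duration_secs]
    return list(zip(edges[:-1], edges[1:]))


if __name__ == "__main__":
    meta = ProgrammeMetadata(
        title="Example Programme",
        synopsis="Placeholder synopsis entered once at ingest.",
        credits=["Producer: A. N. Other"],
        fade_to_black_secs=[600.0, 1200.0],
    )
    for start, end in split_at_breaks(meta, duration_secs=1800.0):
        print(f"{meta.title}: segment {start:.0f}s to {end:.0f}s")
```

Because the cut positions travel with the programme, the same record could feed an ad-insertion system, a web clip generator or a listings feed without any of them re-entering the data.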

New media is here to stay, said Patrick. “Any broadcaster claiming that they will only be supporting certain distribution channels is metaphorically sitting on the beach telling the tide to go back,” he told delegates at the free half-day event.

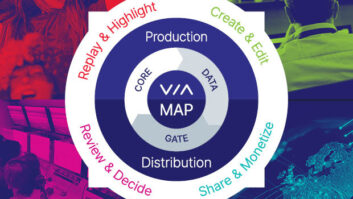

Identifying more efficient procedures for the four stages in programme creation – Ingest, Production, Archive and Distribution – Thomas detailed a regime that applies newsroom techniques to general programming, with a unique job ID assigned at the scripting stage, desktop browsing combined with craft editing, and a return loop to archive.

“Retrieving suitable footage from archive costs a fraction of taking a crew out to shoot something new,” Patrick noted. “Harnessing archive power, however, requires universal media access. It’s crazy that some people working in TV can only see past programmes by physically visiting the library.”

According to Danielson, the best way to achieve this was with centralised storage. He added that spending the few extra seconds required to create low-res proxy files from the ‘air quality’ files (rather than in parallel at ingest) was essential if desktop users were to know with certainty what footage is available. Centralised storage also reduced bandwidth constraints, he said, as each spoke connected to the hub actually added more capacity.