Researchers in the Camera Culture Group at MIT’s Media Lab have developed a system that can convert a conventional camera into a light-field camera capable of producing a 20-megapixel multi-perspective 3D image from a single exposure.

The development is part of growing research into computational photography, the best known example of which is the light-field camera from Lytro.

Light-field cameras capture more information from the visual environment than a conventional camera, recording not only the intensity of light rays but also their angle of arrival. This information can be used to perform a range of post-production operations on the image, including refocussing and generating multi-perspective 3D pictures.

MIT’s advance, called Focii, will be presented in a paper at Siggraph later this month. Rather than relying on specialised hardware, Focii is described on the Media Lab’s website as using “a small rectangle of plastic film, printed with a unique checkerboard pattern, that can be inserted beneath the lens of an ordinary digital single-lens-reflex camera. Software does the rest.”

Gordon Wetzstein, one of the paper’s co-authors, is quoted as saying that the new work complements the Camera Culture Group’s research on glasses-free 3D displays.

“Generating live-action content for these types of displays is very difficult,” Wetzstein said. “The future vision would be to have a completely integrated pipeline from live-action shooting to editing to display. We’re developing core technologies for that pipeline.”

Focii relies on a novel way of representing light fields as a patchwork grid. “Focii represents a light field as a grid of square patches; each patch, in turn, consists of a five-by-five grid of blocks,” the Media Lab stated. “Each block represents a different perspective on a 121-pixel patch of the light field, so Focii captures 25 perspectives in all.”

It added: “A conventional 3D system, such as those used to produce 3D movies, captures only two perspectives; with multi-perspective systems, a change in viewing angle reveals new features of an object, as it does in real life.”
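To make the patch arithmetic concrete, here is a minimal Python sketch of the layout described in the quote: a five-by-five grid of blocks, each holding 121 pixels (taken here as 11 by 11), giving 25 perspectives. How Focii actually arranges blocks in memory is not stated in the source, so the tiled 55-by-55 layout and the names `split_patch`, `BLOCKS_PER_SIDE` and `BLOCK_SIDE` are assumptions made purely for illustration.

```python
# Hypothetical layout: the article gives the counts, not the storage format.
BLOCKS_PER_SIDE = 5   # five-by-five grid of blocks (from the article)
BLOCK_SIDE = 11       # 11 x 11 = 121 pixels per block (square block assumed)
PATCH_SIDE = BLOCKS_PER_SIDE * BLOCK_SIDE  # 55, assuming blocks are tiled


def split_patch(patch):
    """Split a 55x55 patch (list of rows) into 25 blocks keyed by (u, v)."""
    if len(patch) != PATCH_SIDE or any(len(row) != PATCH_SIDE for row in patch):
        raise ValueError("expected a 55x55 patch")
    views = {}
    for u in range(BLOCKS_PER_SIDE):
        # Rows belonging to block-row u.
        rows = patch[u * BLOCK_SIDE:(u + 1) * BLOCK_SIDE]
        for v in range(BLOCKS_PER_SIDE):
            # Columns belonging to block-column v.
            views[(u, v)] = [row[v * BLOCK_SIDE:(v + 1) * BLOCK_SIDE] for row in rows]
    return views


# Synthetic patch in which each value encodes its own position.
patch = [[r * PATCH_SIDE + c for c in range(PATCH_SIDE)] for r in range(PATCH_SIDE)]
views = split_patch(patch)
```

Each entry in `views` stands for one perspective on the same patch, which is what sets a 25-view capture apart from the two views of a conventional stereo pair.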


Added dynamic range

Earlier this year a team at MIT’s Microsystems Technology Laboratory showed a microprocessor it had developed that could instantly add high dynamic range to images shot by smartphones, tablets or digital cameras.

According to an MIT report the chip “automatically takes three separate low dynamic range images with the camera: a normally exposed image, an overexposed image capturing details in the dark areas of the scene, and an underexposed image capturing details in the bright areas. It then merges them to create one image capturing the entire range of brightness in the scene.”

Where existing software-based systems typically take several seconds to perform this operation, the chip can do it in a few hundred milliseconds on a 10-megapixel image, meaning it is even fast enough to apply to video. The chip consumes dramatically less power than existing CPUs and GPUs while performing the operation.
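The three-exposure merge described in the MIT report can be illustrated with a short Python sketch. This is not the chip's algorithm, which the article does not detail; it is a simple weighted average that assumes a linear sensor response, greyscale pixel values between 0 and 1, and known relative exposure times. The function names `hat_weight` and `merge_exposures` are hypothetical.

```python
def hat_weight(value):
    """Trust mid-tones most; near-black and near-white pixels get little weight."""
    return max(1e-6, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(images, exposure_times):
    """Merge same-sized greyscale images (rows of floats in [0, 1]).

    Returns a relative radiance estimate per pixel, assuming a linear sensor.
    """
    height, width = len(images[0]), len(images[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            num = den = 0.0
            for img, t in zip(images, exposure_times):
                w = hat_weight(img[y][x])
                num += w * img[y][x] / t   # divide by exposure time to get radiance
                den += w
            row.append(num / den)
        merged.append(row)
    return merged


# Simulated one-row scene: a dark, a mid-tone and a bright pixel.
scene = [0.05, 0.4, 3.0]
times = [0.25, 1.0, 4.0]  # underexposed, normal, overexposed (assumed ratios)
shots = [[[min(1.0, r * t) for r in scene]] for t in times]
hdr = merge_exposures(shots, times)
```

In this sketch the bright pixel is recovered mainly from the underexposed shot and the dark pixel mainly from the overexposed one, mirroring the division of labour in the quoted description.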

Other advances in computational photography presented at Siggraph include:

Femto-Photography, again from MIT, is described as an ultrafast imaging technique to overcome the challenges of capturing and visualising light in motion.

Another paper, ‘High-Quality Computational Imaging Through Simple Lenses’, seeks to replace complex commercial optics with simple, uncompensated optics and proposes a post-capture processing method that removes the optical aberrations such simple optics produce (this from the University of British Columbia).

There is also a ‘Reconfigurable Camera Add-On for High-Dynamic-Range, Multi-Spectral, Polarization, and Light-Field Imaging’, a theoretical optical design for a camera add-on that can be configured to capture different plenoptic dimensions without permanently changing the camera (this from the Max-Planck-Institut für Informatik and others).

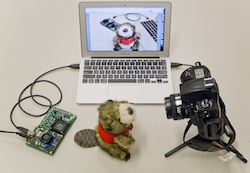

Picture: A setup of a demonstration light-field system that integrates the processor with DDR2 memory and connects with a camera and a display via USB. The system provides a platform for live computational photography.

By Adrian Pennington