To take advantage of new distribution opportunities and fully monetise their content libraries, media organisations need to be able to format content to reach the widest possible audience.

Converting source material, or even the final master, to comply with geographic, platform or device requirements falls under the umbrella term of standards conversion. The most important parameters we convert are picture (or frame) size, dynamic range, colour space and frame rate.

Frame rate conversion is often neglected, yet it governs how the viewer perceives motion. Good frame rate conversion goes unnoticed, but bad frame rate conversion can ruin a programme.

Until recently, content production, post-production and distribution teams only needed to worry about a handful of frame rate standards, traditionally 23.98, 24, 25, 29.97, 50, 59.94 and 60 Hz. Even this small set presents challenges, whether in handling mixed content within a project or simply in formatting the end product for delivery via cinema, linear TV, VoD/OTT, the web and a myriad of mobile devices.

The introduction of high frame rate (above 60 Hz) content, and even user-generated content (UGC), has in many ways complicated the situation further. Today, standards conversion is no longer limited to bridging the differences between film and TV frame rates.

As you might expect, more complex conversions require more sophisticated techniques to preserve both picture quality and smooth motion playback. In the context of frame rate conversion, complexity is largely about the numerical relationship between the source and output frame rates, as well as the inherent motion within a sequence. This is where motion compensated interpolation is widely regarded as providing the best results.
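One way to see why the numerical relationship matters is to write each rate as an exact fraction (the 23.98, 29.97 and 59.94 Hz rates are the integer rates scaled by 1000/1001) and ask how many input frames pass before the timing pattern of output frames repeats. The minimal sketch below does that; `RATES` and `cadence` are our own illustrative names, not part of any product API. A 50 to 60 fps conversion repeats every 5 input frames, whereas 25 to 29.97 fps only repeats every 1001 input frames, and each of the 1200 output frames in that cycle falls at a different fractional position between its neighbouring input frames.

```python
from fractions import Fraction

# Nominal rates as exact fractions. The "NTSC-family" rates are the
# integer rates multiplied by 1000/1001, e.g. 29.97 Hz = 30000/1001.
RATES = {
    "23.98": Fraction(24000, 1001),
    "24": Fraction(24),
    "25": Fraction(25),
    "29.97": Fraction(30000, 1001),
    "50": Fraction(50),
    "59.94": Fraction(60000, 1001),
    "60": Fraction(60),
}


def cadence(src: Fraction, dst: Fraction) -> tuple[int, int]:
    """Return (n_in, n_out): the smallest whole numbers of input and output
    frames spanning exactly the same duration. After n_in input frames the
    positions of output frames relative to input frames start repeating."""
    ratio = dst / src  # output frames per input frame, kept in lowest terms
    return ratio.denominator, ratio.numerator


for src, dst in [("50", "60"), ("23.98", "29.97"), ("25", "29.97"), ("59.94", "50")]:
    n_in, n_out = cadence(RATES[src], RATES[dst])
    print(f"{src} -> {dst}: {n_in} input frames : {n_out} output frames")
```

Short cycles such as 4:5 (the relationship behind classic 3:2 pulldown) or 5:6 involve only a handful of distinct intermediate positions; long cycles effectively require a new in-between position for almost every output frame, which is where motion compensated interpolation earns its keep.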

The only way to ensure smooth motion and a crisp, clear picture through frame rate conversion is to analyse adjacent frames, detect and calculate the movement of objects within the scene, and create new frames with appropriate object placement and display timing. This is how motion compensation places a moving object in the right place, at the right time and on the right path while converting content from one frame rate to another. The approach bridges the temporal and spatial differences between source and output by generating new frames (still images) that very closely represent what the viewer would have seen had the content been captured at the output rate.
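The analyse, detect and create steps can be illustrated with a deliberately tiny example. This sketch works on one-dimensional "frames" (a single row of pixel intensities), estimates one global motion vector by exhaustive block matching (minimising the mean absolute difference), then builds an in-between frame by shifting both neighbouring frames along that vector and blending them. It rests on strong simplifying assumptions: production motion compensated converters estimate vectors per block or per pixel, to sub-pixel precision, and must handle occlusions, none of which is modelled here. All function names are hypothetical.

```python
def shift(frame: list[float], s: int) -> list[float]:
    """Move the frame's content s samples right (left if s < 0), padding with 0."""
    n = len(frame)
    return [frame[i - s] if 0 <= i - s < n else 0.0 for i in range(n)]


def estimate_motion(a: list[float], b: list[float], max_shift: int) -> int:
    """Exhaustive block matching: return the displacement d in [-max_shift, max_shift]
    that minimises the mean absolute difference between a[i] and b[i + d]."""
    n = len(a)
    if not 0 <= max_shift < n:
        raise ValueError("max_shift must be smaller than the frame width")

    def cost(d: int) -> float:
        overlap = [i for i in range(n) if 0 <= i + d < n]
        return sum(abs(a[i] - b[i + d]) for i in overlap) / len(overlap)

    return min(range(-max_shift, max_shift + 1), key=cost)


def interpolate(a: list[float], b: list[float], alpha: float, max_shift: int = 8) -> list[float]:
    """Synthesise the frame lying a fraction alpha (0..1) of the way from a to b."""
    d = estimate_motion(a, b, max_shift)
    fwd = round(alpha * d)  # displacement of the content since frame a (whole samples)
    bwd = fwd - d           # displacement from frame b back to the same instant
    from_a, from_b = shift(a, fwd), shift(b, bwd)
    # Blend the two motion-compensated predictions, favouring the nearer frame.
    return [(1 - alpha) * x + alpha * y for x, y in zip(from_a, from_b)]


def ball_frame(pos: int, width: int = 32) -> list[float]:
    """A 1-D test frame with a 3-sample-wide 'ball' starting at pos."""
    return [1.0 if pos <= i < pos + 3 else 0.0 for i in range(width)]


a, b = ball_frame(4), ball_frame(10)  # the ball moves 6 samples between frames
mid = interpolate(a, b, alpha=0.5)
print(estimate_motion(a, b, 8))                   # 6
print([i for i, v in enumerate(mid) if v > 0.5])  # [7, 8, 9]
```

The halfway frame places the ball at samples 7 to 9, exactly between its positions in the two source frames. A plain cross-fade of `a` and `b` would instead show two half-intensity balls, the "ghosting" that motion compensation is designed to avoid.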

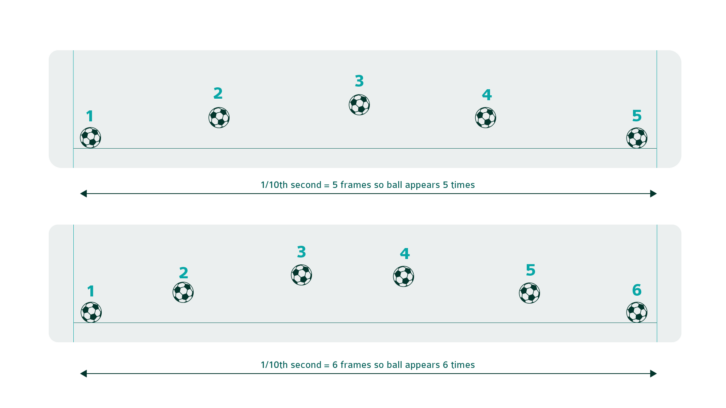

The above image illustrates motion compensated frame rate conversion from 50 frames per second (fps) to 60 fps in simple terms. The top image represents five frames (0.1 sec) of 50 fps video, and the moving football appears in each of these five frames. As the bottom image demonstrates, conversion to 60 fps results in six frames, or six appearances of the ball within the same period.
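The timing shown in the figure can be worked out exactly. Output frame k at 60 fps is displayed at k/60 s, which is 5k/6 of a 50 fps frame period; the integer part of that value identifies the pair of source frames it falls between, and the fractional part (the phase) says how far along. A phase of zero means the output frame coincides with a source frame. The `schedule` helper below is our own illustrative name.

```python
import math
from fractions import Fraction


def schedule(src_fps: Fraction, dst_fps: Fraction, n_out: int) -> list[tuple[int, Fraction]]:
    """For each output frame k, return (i, phase): output frame k is displayed
    `phase` of the way from source frame i to source frame i + 1."""
    plan = []
    for k in range(n_out):
        t = k * src_fps / dst_fps  # display time of output frame k, in source frames
        i = math.floor(t)
        plan.append((i, t - i))
    return plan


for k, (i, phase) in enumerate(schedule(Fraction(50), Fraction(60), 6)):
    action = "keep original" if phase == 0 else "interpolate"
    print(f"output {k}: t = {Fraction(k, 60)} s, source {i} + {phase} -> {action}")
```

Only the first of the six output frames lines up with a source frame; the other five sit at phases 5/6, 2/3, 1/2, 1/3 and 1/6, so each needs the ball drawn at a different intermediate position.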

So we have somehow gained a frame. If we achieved this simply by duplicating one of the frames, the ball would not move between that frame and its copy. Combined with the shorter time between frames at 60 fps, this would result in jerky, uneven motion.
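The judder can be quantified. Assuming, purely for illustration, that the ball moves 12 pixels per 50 fps frame (600 pixels per second), this sketch compares how far the ball moves between consecutive 60 fps output frames when each output simply shows the latest source frame, versus when the ball is placed at its motion compensated position. `SPEED` and the function names are hypothetical.

```python
import math
from fractions import Fraction

SPEED = 12  # hypothetical: pixels the ball moves per 50 fps source frame


def ball_positions(n_out: int, src_fps: int = 50, dst_fps: int = 60):
    """Ball position in each output frame for two ways of creating 60 fps."""
    duplicated, compensated = [], []
    for k in range(n_out):
        t = Fraction(k * src_fps, dst_fps)        # output time in source frames
        duplicated.append(SPEED * math.floor(t))  # show the latest source frame as-is
        compensated.append(SPEED * t)             # ball placed where it is at time t
    return duplicated, compensated


def steps(positions) -> list[int]:
    """Distance the ball travels between consecutive output frames."""
    return [int(b - a) for a, b in zip(positions, positions[1:])]


dup, mc = ball_positions(7)        # frames at t = 0 .. 0.1 s (six 1/60 s intervals)
print("duplication:", steps(dup))  # [0, 12, 12, 12, 12, 12]
print("motion comp:", steps(mc))   # [10, 10, 10, 10, 10, 10]
```

With duplication the ball stalls for one frame and then covers 12 pixels every 1/60 s, which is faster than its true speed; with motion compensation it advances an even 10 pixels per frame, the same 600 pixels per second as the source.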

Motion compensation makes it possible to insert new frames in which the ball appears at its predicted location for the moment each new frame is displayed. Original frames can also be preserved, both where they coincide in time with an output frame and at scene changes.
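Those two exceptions can be expressed as a simple per-frame decision. This is a sketch of one plausible policy, not a description of any particular product: it assumes a crude cut detector (mean absolute difference between neighbouring source frames compared against a hypothetical threshold) and, at a cut, reuses the nearer original frame rather than interpolating across two unrelated shots. Real scene-change detection is considerably more sophisticated; all names and the threshold value are illustrative.

```python
import math
from fractions import Fraction

CUT_THRESHOLD = 0.5  # hypothetical: mean absolute difference that signals a cut


def mean_abs_diff(a: list[float], b: list[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def choose_source(frames: list[list[float]], t: Fraction) -> tuple[str, int]:
    """Decide how to make the output frame at time t (in source-frame units).

    Returns ("copy", i) to pass source frame i through unchanged, or
    ("interpolate", i) to synthesise a frame between source frames i and i + 1.
    """
    i = math.floor(t)
    phase = t - i
    if phase == 0 or i + 1 >= len(frames):
        return ("copy", i)  # output coincides with an original frame
    if mean_abs_diff(frames[i], frames[i + 1]) > CUT_THRESHOLD:
        # Scene change: interpolating would blend two shots, so reuse
        # whichever original frame is nearer in time.
        return ("copy", i if phase < Fraction(1, 2) else i + 1)
    return ("interpolate", i)


shot_a, shot_b = [0.1] * 8, [0.9] * 8
frames = [shot_a, shot_a, shot_b, shot_b]  # cut between source frames 1 and 2
for k in range(4):
    t = Fraction(k * 50, 60)  # 60 fps output times in 50 fps source-frame units
    print(k, choose_source(frames, t))
```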

Conversion in the Cloud

The availability of Cloud services has released broadcasters from the shackles of inflexible and costly Capex-based infrastructures. They can now choose the Cloud to host their operations or access third-party media services.

Most media organisations are doing both, as costs are easier to manage when you pay only for what you actually use, and only when you need it. There are also economies of scale to be had, as Cloud providers offer attractive pricing models for high-demand customers.

Until recently, high-quality motion compensated frame rate conversion of file-based or live content was one of the few remaining gaps in Cloud service offerings. The availability of software-based conversion running on CPU resources alone has changed that: with virtually unlimited CPU processing power available, media organisations can scale content processing quickly and cost-effectively.

InSync takes a partnership approach to Cloud services. Our mission is to make some or all of our unique conversion capabilities available within leading Cloud service platforms. This is where our flagship FrameFormer software engine comes in.

Real-World Implementations

InSync FrameFormer provides the best in motion compensated frame rate conversion. It can be integrated via its open API into any part of the media production chain, but most commonly sits within file-based transcoding platforms. Because FrameFormer uses only CPU resources, it is ideally suited to Cloud deployment, where, as explained earlier, processing can be scaled almost without limit.

InSync has engaged with prominent trailblazers in the media Cloud services space, and FrameFormer conversion is currently available from three partners: AWS Elemental, Dalet and Hiscale.