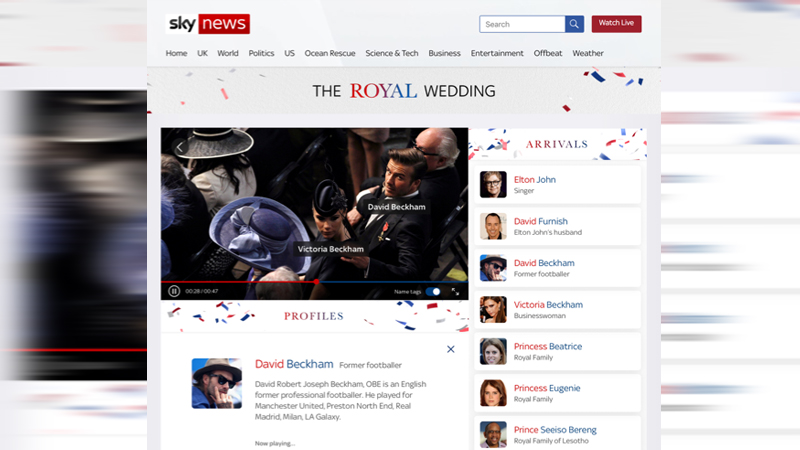

Sky News will use pioneering cloud-based machine learning and media services to name guests as they enter the chapel ahead of the Royal Wedding on 19th May.

Accessible in the Sky News app or via skynews.com, the Royal Wedding: Who’s Who Live functionality uses machine learning to enhance the user experience and enrich the video content with relevant facts about the attendees.

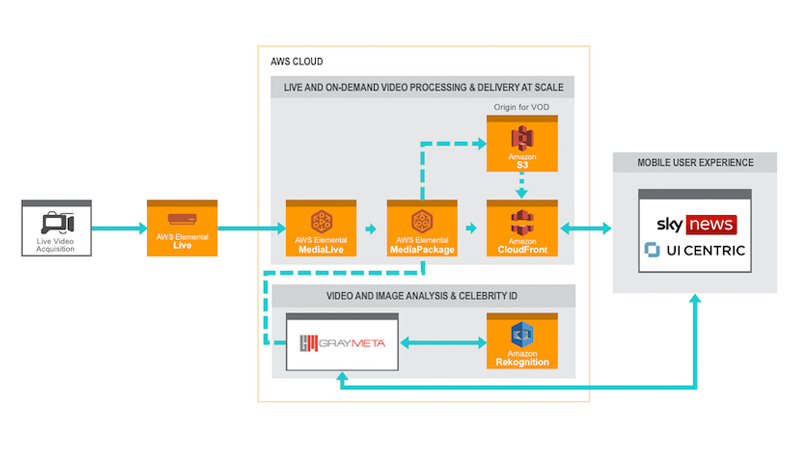

To deliver this service at scale, Sky News is collaborating with Amazon Web Services (AWS) and two AWS technology partners, GrayMeta and UI Centric. As guests make their way into St George’s Chapel in Windsor, AWS will capture the live video and send it to cloud-based AWS Elemental Media Services, which will optimise it for viewing across multiple screens. An on-demand video asset, including catch-up functionality, will also be generated.

To find out more about the technology, TVBEurope spoke to Keith Wymbs, CMO of AWS Elemental (formerly Elemental Technologies), about the venture and the motivations behind it.

“The main point of context is that, in the video industry, it’s becoming clear, I think to most folks involved in the distribution of video, that having an amazing and differentiated customer experience is really important for their long term outlook, and so there’s definitely a lot of experimentation going on,” says Wymbs.

He sees this particular application, the Who’s Who app, as an example of a new level of experience that simply wasn’t possible before, because historically doing it in real time would have required too many people.

“It’s a difficult thing to do when you don’t know the exact order of arrival etc,” adds Wymbs. “You’re conveying as much information as you can via the commentary but frankly that’s not a huge level of depth.

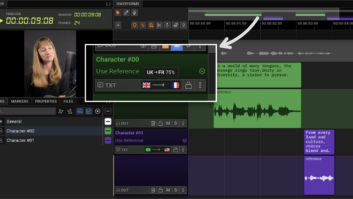

“What’s going on here is we’re essentially taking what was originally just image recognition technology, taking the video streams that are coming from Elemental Media Services, and analysing those in real time based on pictures that have been uploaded to the Amazon Rekognition machine learning service, which is then combined with metadata to provide a depth and customisation of experience that’s great and completely different for the viewers.”

As Royal Wedding attendees appear on screen, they are identified and linked to deeper metadata via the GrayMeta service, which is then displayed within the app. This gives viewers additional context beyond the five to ten seconds of commentary a guest would typically receive in a red carpet scenario.
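For readers curious about the mechanics, the identify-then-enrich step can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not how Sky News, AWS or GrayMeta built the feature: in the real system faces are matched by Amazon Rekognition, whereas here a simple cosine-similarity nearest-neighbour search over toy embedding vectors stands in for that service. The gallery, metadata store, 0.9 threshold and function names are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical reference gallery: guest name -> face embedding.
# In the system described above, reference photos are uploaded to Amazon
# Rekognition; these toy 3-D vectors merely stand in for that index.
GALLERY = {
    "Guest A": [0.9, 0.1, 0.0],
    "Guest B": [0.0, 1.0, 0.2],
}

# Hypothetical metadata store, playing the role GrayMeta has in the article.
METADATA = {
    "Guest A": "Example biography for Guest A",
    "Guest B": "Example biography for Guest B",
}


@dataclass
class GuestCard:
    """What the app might display when a guest is recognised (hypothetical)."""
    name: str
    similarity: float
    timestamp_s: float
    facts: str


def cosine(u, v) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def identify(embedding, threshold: float = 0.9) -> Optional[Tuple[str, float]]:
    """Return (name, similarity) of the best gallery match, or None if below threshold."""
    best_name, best_sim = None, -1.0
    for name, ref in GALLERY.items():
        sim = cosine(embedding, ref)
        if sim > best_sim:
            best_name, best_sim = name, sim
    return (best_name, best_sim) if best_sim >= threshold else None


def enrich(embedding, timestamp_s: float) -> Optional[GuestCard]:
    """Identify a face seen at timestamp_s and attach its metadata."""
    match = identify(embedding)
    if match is None:
        return None  # unknown face: show nothing rather than guess
    name, sim = match
    return GuestCard(name, sim, timestamp_s, METADATA.get(name, ""))
```

Calling `enrich([0.88, 0.12, 0.01], 12.5)` returns a card for "Guest A" with its biography attached, while an embedding that resembles no one in the gallery returns `None`. The confidence threshold reflects the trade-off any such service faces: a higher value means fewer wrongly named guests, at the cost of leaving more attendees unlabelled.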

Wymbs believes there are all kinds of applications for the technology, and has already seen it employed in the world of sports.

“There are enough close-up shots and enough in terms of fidelity to be able to cross-reference pictures of players to identify who they are,” explains Wymbs. “With something more race-orientated like the Tour de France, which has collapsed periods of coverage that focus on the leaders or really specific climbs or sprints, people only care about their favourites and would rather get a more personalised experience. It’s hard for the commentators to cover all the different possibilities of what the audience would like to engage with.

“When you start to do image and video recognition specifically, you are able to automate some of this capability, understand where these individuals are in the video and provide a level of depth which doesn’t require as much of a human interface to create.”

Wymbs also describes government agencies beginning to use the technology to identify senators and congresspeople giving speeches that are broadcast publicly.

“It’s important to remember that this really wasn’t possible before some of the infrastructure and capability moved to the cloud,” Wymbs continues. “If you were going to do something experimental like this app, you don’t really know what the demand is going to be or how much effort it’s going to require, so there’s a big risk in that sense. With the cloud, it’s essentially pay-as-you-go, so companies need not act so frugally, and with social media nowadays we’ll know exactly how it’s being received almost immediately.

“Having the cloud there to be able to scale to whatever the demand is allows you to align the potential with the underlying infrastructure in a real-time sense, and that elasticity is very important in addition to the capability of the video recognition technology itself and its combination with metadata to enhance the user experience.”